Research Demos

The following Research Demos have been accepted for publication at IEEE VR 2015:

- Research Demo 1: Non-Obscuring Binocular Eye Tracking for Wide Field-of-View Head-Mounted Displays

- Research Demo 2: Dynamic 3D Interaction using an Optical See-through HMD

- Research Demo 3: Laying out spaces with virtual reality

- Research Demo 4: Three-dimensional VR Interaction Using the Movement of a Mobile Display

- Research Demo 5: Towards a High Resolution Grip Measurement Device for Orthopaedics

- Research Demo 6: Aughanded Virtuality - The Hands in the Virtual Environment

- Research Demo 7: Never Blind VR

- Research Demo 8: MagicPot360: Free-Viewpoint Shape Display

- Research Demo 9: Various Forms of Tactile Feedback Displayed on the Back of the Tablet

- Research Demo 10: Presentation of Virtual Liquid by Modeling Vibration of a Japanese Sake Bottle

- Research Demo 11: Walking recording and experience system by Visual Psychophysics Lab

- Research Demo 12: Live Streaming System for Omnidirectional Video

- Research Demo 13: Blind in a Virtual World

- Research Demo 14: Exploring Virtual Reality and Prosthetic Training

- Research Demo 15: VR based Surgical Training

- Research Demo 16: A Multi-Projector Display System of Arbitrary Shape, Size and Resolution

- Research Demo 17: A 3D Heterogeneous Interactive Web Mapping Application

- Research Demo 18: Virtual Reality toolbox for experimental psychology – Research Demo

- Research Demo 19: Augmented Reality maintenance demonstrator

- Research Demo 20: Augmented reality for maintenance application on a mobile platform

- Research Demo 21: Underwater Integral Photography

- Research Demo 22: Epilogi in Crisis

Research Demo 8

MagicPot360: Free-Viewpoint Shape Display Modifying the Perception of Shape

Yuki Ban, Takuji Narumi, Tomohiro Tanikawa, and Michitaka Hirose

Graduate School of Information Science and Technology, The University of Tokyo

Abstract: In this paper, we develop a free-viewpoint curved-surface shape display that exploits visuo-haptic interaction. Our aim is a mechanically simple visuo-haptic system with which users can touch various objects with their real hands. In the proposed system, users touch virtual objects of various shapes through a tablet device while walking around the object. From the current viewpoint, the system computes the difference in shape between the real object and the virtual one and calculates, in real time, how far the image of the touching hand must be displaced to fit the virtual object. This modified movement of the hand image evokes a visuo-haptic effect that lets users feel they are touching various shapes from various viewpoints, even though they are actually touching a physically static object.

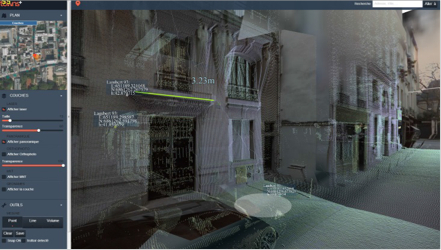

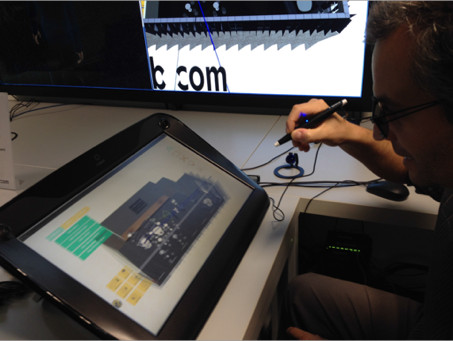
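The displacement step can be illustrated with a minimal sketch. It assumes, purely for illustration, that the real pot and the virtual object are both surfaces of revolution about the same vertical axis, so the rendered hand only needs to be pushed radially by the difference between the two radii at the touched height. The function names and shapes below are hypothetical, and the sketch computes a 3D offset only; projecting that offset into the tablet's camera image for the current viewpoint is omitted.

```python
import math


def hand_displacement(theta, height, real_radius, virtual_radius):
    """Return the (dx, dy) offset that moves a hand touching the real
    surface at angle `theta` (radians) and `height` onto the virtual surface.

    Hypothetical model: both objects are surfaces of revolution around the
    same vertical axis; `real_radius` and `virtual_radius` map a height to
    the surface radius at that height.
    """
    delta = virtual_radius(height) - real_radius(height)
    # Push the rendered hand outward (or inward) along the radial direction.
    return (delta * math.cos(theta), delta * math.sin(theta))


# Hypothetical shapes: a static cylinder (the real pot) and a virtual vase
# whose radius bulges in the middle. Units are metres.
def real_pot(h):
    return 0.10


def virtual_vase(h):
    return 0.10 + 0.03 * math.sin(math.pi * h / 0.30)


dx, dy = hand_displacement(0.0, 0.15, real_pot, virtual_vase)
print(f"offset: ({dx:.3f}, {dy:.3f}) m")
```

At mid-height the virtual vase is 3 cm wider than the pot, so the hand image is shifted 3 cm outward while the real hand stays on the pot.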

Research Demo 17

3D Heterogeneous Interactive Web Mapping Application

Quoc-Dinh Nguyen, Alexandre Devaux, Mathieu Bredif and Nicolas Paparoditis

Université Paris-Est, IGN, SRIG, MATIS

Abstract: Modern web browsers offer powerful new possibilities with HTML5, which makes it possible to use the full power of a device, including the GPU and sensors such as GPS, the accelerometer and the camera. Hardware-accelerated 3D content in the browser enables new web-based applications that were previously the exclusive domain of desktop environments. This paper introduces a novel WebGL-based 3D GIS navigation system that allows end users to navigate a realistic, immersive 3D urban scene and to interact with different spatial data, such as panoramic images, laser data, 3D city models and vector data. It also offers modern functionality such as using a smartphone as a remote control, rendering for 3D screens and making the scene dynamic.

Research Demo 5

Towards a High Resolution Grip Measurement Device for Orthopaedics

Marc R. Edwards, Peter Vangorp, Nigel W. John

Visualisation and Medical Graphics Laboratory, Bangor University, United Kingdom

Abstract: We are developing a novel device for measuring hand power grip using frustrated total internal reflection of light in acrylic. Our method uses a force-sensitive resistor to calibrate the force of a power grip as a function of contact area and light intensity. This research is a work in progress, but results so far augur well for its applicability in medical and other areas. The device lets the patient and doctor see how grip changes over time and projects this information directly onto the back of the patient's hand.
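The abstract does not say which calibration model is used. As a hypothetical illustration, the sketch below fits a linear model force ≈ a*area + b*intensity + c to reference readings from the force-sensitive resistor by ordinary least squares, using only the standard library. The linear form, the function names and the sample values are assumptions.

```python
def fit_grip_model(samples):
    """Least-squares fit of force ~ a*area + b*intensity + c.

    `samples` is a list of (area, intensity, fsr_force) tuples, where the
    reference force comes from the force-sensitive resistor. The linear
    model is a hypothetical choice for illustration.
    """
    # Build the normal equations (X^T X) w = X^T y for rows [area, intensity, 1].
    n = 3
    ata = [[0.0] * n for _ in range(n)]
    aty = [0.0] * n
    for area, intensity, force in samples:
        row = (area, intensity, 1.0)
        for i in range(n):
            aty[i] += row[i] * force
            for j in range(n):
                ata[i][j] += row[i] * row[j]
    # Gaussian elimination with partial pivoting on the augmented matrix.
    m = [ata[i] + [aty[i]] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back-substitution.
    w = [0.0] * n
    for i in reversed(range(n)):
        w[i] = (m[i][n] - sum(m[i][j] * w[j] for j in range(i + 1, n))) / m[i][i]
    return tuple(w)  # (a, b, c)


def predict_force(model, area, intensity):
    """Estimate grip force from an area/intensity measurement."""
    a, b, c = model
    return a * area + b * intensity + c


# Synthetic calibration data generated from force = 2*area + 0.5*intensity + 1.
samples = [(area, inten, 2 * area + 0.5 * inten + 1)
           for area, inten in [(1, 10), (2, 5), (3, 20), (4, 8), (5, 15)]]
model = fit_grip_model(samples)
```

Once fitted, `predict_force` converts each new area/intensity measurement from the acrylic into a grip-force estimate without the resistor. A real device may need a non-linear model if light intensity and force are not linearly related.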

Research Demo 6

Aughanded Virtuality - The Hands in the Virtual Environment

Tobias Günther, Ingmar S. Franke, Rainer Groh

Technische Universität Dresden

Abstract: The main motivation of this research demo is the use of Augmented Virtuality technology, in particular the egocentric representation of real body parts in virtual, immersive environments. To this end, a prototype application was developed that superimposes a live captured video stream of the user's hands on the virtual scene in real time. Compared with virtual avatars, this approach provides a detailed, individual representation of the user's body and avoids complex tracking hardware. Because a toolbox is integrated, the visual appearance of the video overlay is highly customizable.

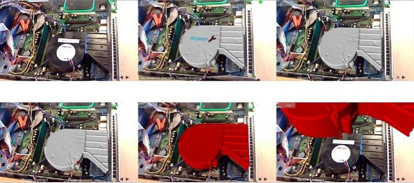

Research Demo 19

Augmented Reality maintenance demonstrator and associated modelling

V. Havard, D. Baudry, A. Louis, B. Mazari

LUSINE and IRISE laboratories, CESI

Abstract: Augmented reality adds virtual objects to a real scene. Interest in it has grown in recent years as mobile devices have become powerful and inexpensive. Augmented reality is used in various domains, such as maintenance, training, education, entertainment and medicine. The demonstrator we show focuses on maintenance operations: a step-by-step process is presented to the operator to guide the maintenance of a system element. Based on this demonstration, we explain the model we propose for describing an entire maintenance process with augmented reality. Creating augmented reality applications without programming skills is still difficult; the proposed model will make it possible to build an authoring tool, or to plug into an existing one, so that augmented reality processes can be created without deep programming skills.

Research Demo 10

Presentation of Virtual Liquid by Modeling Vibration of a Japanese Sake Bottle

Sakiko Ikeno (1), Ryuta Okazaki (1, 2), Taku Hachisu (1, 2), Hiroyuki Kajimoto (1, 3)

(1) The University of Electro-Communications, (2) JSPS Research Fellow, (3) Japan Science and Technology Agency

Abstract: Visual, auditory and tactile modalities are known to affect the experience of eating and drinking. One example is the "glug" sound and vibration of a Japanese sake bottle when liquid is poured. Our previous studies modeled the vibration waveform as the sum of two decaying sinusoids with different frequencies. In this paper, to express a wider variety of liquids, we add two new liquid properties, viscosity and the residual amount of liquid, both modeled from recorded data.
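The two-component vibration model described above can be written as s(t) = A1·exp(-d1·t)·sin(2π·f1·t) + A2·exp(-d2·t)·sin(2π·f2·t). The following sketch synthesises such a waveform. The amplitudes, frequencies and decay rates are placeholder values, not the parameters fitted from the authors' recordings, and the effect of viscosity or residual amount on them is not modeled here.

```python
import math


def glug_waveform(duration, sample_rate, components):
    """Sum of exponentially decaying sinusoids.

    `components` is a list of (amplitude, frequency_hz, decay_rate) tuples;
    each contributes amplitude * exp(-decay_rate * t) * sin(2*pi*frequency_hz*t).
    """
    n = int(round(duration * sample_rate))
    samples = []
    for k in range(n):
        t = k / sample_rate
        samples.append(sum(a * math.exp(-d * t) * math.sin(2 * math.pi * f * t)
                           for a, f, d in components))
    return samples


# Hypothetical parameters for one "glug": a low and a higher component,
# each decaying at its own rate.
wave = glug_waveform(0.1, 8000, [(1.0, 40.0, 30.0), (0.5, 300.0, 60.0)])
```

The resulting samples could be sent to a vibrotactile actuator (for example through an audio output) each time a glug is triggered.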

Research Demo 11

Walking recording and experience system by Visual Psychophysics Lab

Atsuhiro Fujita, Shohei Ueda, Junki Nozawa

Graduate School of Engineering, Toyohashi University of Technology

Koichi Hirota

Interfaculty Initiative in Information Studies, The University of Tokyo

Yasushi Ikei

Faculty of System Design, Tokyo Metropolitan University

Michiteru Kitazaki

Department of Computer Science and Engineering, Toyohashi University of Technology

Abstract: We aim to develop a virtual reality system that records a person's walking experience and lets other users experience that walk. We recorded stereo video from cameras mounted on a person's forehead, synchronized with acceleration data from the ankles. We then presented the stereo video on an HMD, synchronized with vibrations on the soles of the observer's feet. Observers reported a stronger sense of vection, walking and tele-existence from stereo images with vibrations than without them. We also recorded the walking experiences of different body sizes, including a child (130 cm tall) and a dog. Observers could partly experience a child's walking and even a dog's running.

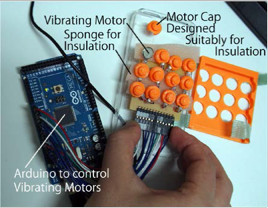
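The abstract does not describe how the ankle acceleration is turned into sole vibration. One simple possibility, shown here as a hypothetical sketch, is to detect heel-strike peaks in the recorded acceleration and fire a short vibration burst at each one. The threshold, the minimum step interval and the function name are assumptions.

```python
def detect_steps(accel, sample_rate, threshold, min_interval=0.3):
    """Return sample indices of heel-strike-like peaks.

    A sample counts as a step when it is a local maximum, exceeds
    `threshold`, and occurs at least `min_interval` seconds after the
    previously detected step (to suppress double detections).
    """
    steps = []
    min_gap = int(min_interval * sample_rate)
    for i in range(1, len(accel) - 1):
        is_peak = accel[i] >= accel[i - 1] and accel[i] > accel[i + 1]
        if is_peak and accel[i] > threshold:
            if not steps or i - steps[-1] >= min_gap:
                steps.append(i)
    return steps
```

Each returned index, divided by the sample rate, gives the playback time at which the corresponding sole actuator would be driven.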

Research Demo 9

Various Forms of Tactile Feedback Displayed on the Back of the Tablet: Latency Minimized by Using Audio Signal to Control Actuators

Itsuo Kumazawa*, Kyohei Sugiyama, Tsukasa Hayashi, Yasuhiro Takatori and Shunsuke Ono

Imaging Science and Engineering Laboratory, Tokyo Institute of Technology

Abstract: The front face of a tablet-style smartphone or computer is dominated by its touch screen. Because a finger on the touch screen obstructs the view, fingers are expected to touch the screen only momentarily. Under this restriction, using the rear surface of the tablet as a tactile display is promising, since the fingers constantly rest on the back face and can feel tactile information there. In our presentation, we demonstrate various tactile feedback mechanisms implemented on the back face and evaluate the feedback latency and its effect on usability for different means of controlling the actuators, such as wireless LAN, Bluetooth and audio signals. The results show that audio signals are a promising way to generate quick tactile feedback.

Research Demo 3

Laying out spaces with virtual reality

Morgan Le Chénéchal, Jérémy Lacoche, Cyndie Martin, Jérôme Royan

IRT

Abstract: In the real estate business, it is quite difficult for estate agents to make customers understand the potential and the volume of free spaces. We therefore propose an application that addresses this problem through a layout scenario in which a seller and a customer collaborate. Because the two users have different roles, we propose an asymmetric collaboration in which they use different interaction setups and have different interaction capabilities.

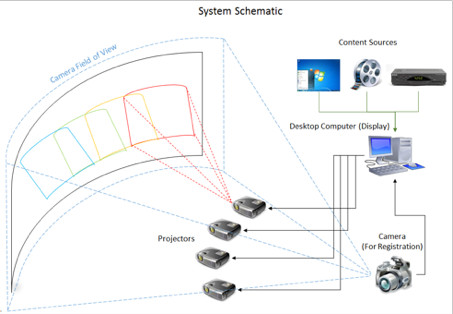

Research Demo 16

A Multi-Projector Display System of Arbitrary Shape, Size and Resolution

Aditi Majumder, Duy-Quoc Lai, Mahdi Abbaspour Tehrani

University of California, Irvine

Abstract: In this demo, we will show the integration of general content delivery from a Windows desktop with a multi-projector display of arbitrary shape, size and resolution that is automatically calibrated using our methods. We developed these fully automatic geometric and color registration techniques in our lab to deploy seamless multi-projector displays on popular non-planar surfaces (e.g., cylinders, domes, truncated domes). This work has received significant attention in both VR and visualization venues over the past five years, and this will be the first time such calibration is integrated with content delivery.

Research Demo 13

Blind in a Virtual World: Mobility-Training Virtual Reality Games for Users who are Blind

Shachar Maidenbaum, Amir Amedi

Hebrew University of Jerusalem, ELSC, IMRIC, Israel

Abstract: One of the main challenges to the practical use of new assistive technology for the blind is training. This is true both for mastering the device and, even more importantly, for learning to use it in specific environments. Such training usually requires external help, which is not always available and can be costly, while attempting to navigate without such preparation can be dangerous. Here we will demonstrate several games developed in our lab as part of the training programs for the EyeCane, which augments the traditional white cane with additional range and angles. These games avoid the problems of availability, cost and safety mentioned above, and they also use gamification techniques to boost the training process. Visitors to the demonstration will use these devices while blindfolded to perform simple in-game virtual tasks, such as finding the exit from a room or avoiding obstacles.

Research Demo 21

Underwater Integral Photography

Nahomi Maki, Kazuhisa Yanaka

Kanagawa Institute of Technology

Abstract: This study demonstrates a novel integral photography (IP) system in which the pop-out distance is more than three times larger than usual. IP is an ideal candidate for bringing autostereoscopic displays into virtual reality because it provides both horizontal and vertical parallax. However, the pop-out distance obtained with IP is generally far smaller than that obtained with a head-mounted display, because ray density decreases when the viewer is far from the fly's eye lens. One solution is to extend the focal length of the fly's eye lens, but such a lens is difficult to manufacture. We address this problem simply by immersing the fly's eye lens in water to extend its effective focal length.

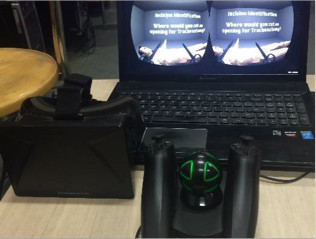
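Why water lengthens the focal length follows from the thin-lens (lensmaker's) equation in a medium of refractive index n_m: 1/f = (n_lens/n_m - 1)(1/R1 - 1/R2). Because the curvature term is the same in air and in water, the ratio of the two focal lengths depends only on the refractive indices. The sketch assumes an acrylic lens (n ≈ 1.49) fully immersed in water (n ≈ 1.333); the actual lens material and geometry of the authors' setup are not stated, so the real factor may differ.

```python
def immersed_focal_ratio(n_lens, n_medium):
    """Ratio f_medium / f_air for a thin lens immersed in a medium.

    From the lensmaker's equation, 1/f = (n_lens/n_medium - 1)(1/R1 - 1/R2);
    the curvature term (1/R1 - 1/R2) cancels in the ratio.
    """
    return (n_lens - 1.0) / (n_lens / n_medium - 1.0)


# Assumed refractive indices: acrylic lens (about 1.49) in water (about 1.333).
print(round(immersed_focal_ratio(1.49, 1.333), 2))  # prints 4.16
```

With these assumed indices the focal length grows by a factor of roughly four, which is consistent in direction with the larger pop-out reported above; the exact relationship between focal length and pop-out distance is not modeled here.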

Research Demo 15

Low Cost Virtual Reality for Medical Training

Aman S. Mathur

Jaipur Engineering College & Research Centre

Abstract: This demo presents a low-cost virtual reality setup that can be used for medical training and instruction. Using devices such as the Oculus Rift and Razer Hydra, it provides an immersive experience that includes hand interaction. Software running on a PC integrates these devices and presents an interactive, immersive training environment in which trainees perform a mix of simple and complex tasks, ranging from identifying certain organs to performing an actual incision. Trainees learn by doing, albeit in the virtual world. The system's components are relatively affordable and simple to use, which makes such a setup easy to deploy.

Research Demo 7

“Never Blind VR” Enhancing the Virtual Reality Headset Experience with Augmented Virtuality

David Nahon, Geoffrey Subileau, Benjamin Capel

Dassault Systèmes, Passion for Innovation Institute, Immersive Virtuality (iV) Lab

Abstract: In this demo, we share our findings from building real-time 3D experiences with consumer headsets that go beyond the first-person-shooter gaming use for which the headsets are designed. We address the key problems of such experiences: they isolate users from their own bodies and make them lose contact with other people in the room and with the real world. To solve these issues, we use an off-the-shelf Kinect for Windows v2 to inject some reality into the virtuality.

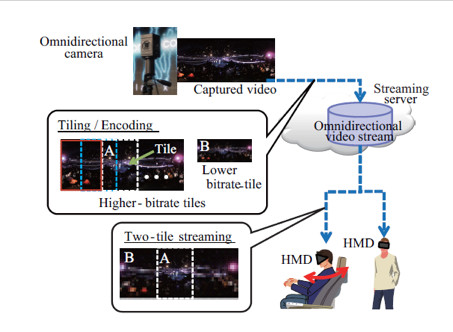

Research Demo 12

Live Streaming System for Omnidirectional Video

Daisuke Ochi, Akio Kameda, Yutaka Kunita, Akira Kojima

NTT Media Intelligence Laboratories

Shinnosuke Iwaki

DWANGO Co., Ltd.

Abstract: NTT Media Intelligence Laboratories and DWANGO Co., Ltd. have jointly developed a virtual reality system that gives users an immersive experience of visiting a remote site. The system lets users watch whichever part of the video content they want, using interactive streaming technology that selectively streams the section the user is watching at a high bitrate within limited network bandwidth. Applying this technology to omnidirectional video lets users experience a sense of presence through an intuitive head-mounted display. The system has also been released on a commercial platform and has successfully streamed a real-time event. In the demonstration, we will present the details of the system and the results of the streaming service.
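A minimal sketch of viewport-dependent bitrate selection, assuming the omnidirectional frame is split into equal-width vertical strips by yaw angle: strips whose centre falls inside the viewer's horizontal field of view are requested at a high bitrate, and the rest at a low one. The tiling scheme, parameter names and bitrates are assumptions; the abstract does not describe how the system partitions the video.

```python
def assign_bitrates(yaw_deg, fov_deg, num_tiles, high_kbps, low_kbps):
    """Assign a bitrate to each vertical strip (tile) of a 360-degree video.

    Tiles whose centre lies within half the field of view of the viewer's
    yaw get `high_kbps`; all other tiles get `low_kbps`.
    """
    tile_width = 360.0 / num_tiles
    rates = []
    for i in range(num_tiles):
        centre = (i + 0.5) * tile_width
        # Smallest angular difference, accounting for wrap-around at 360 degrees.
        diff = abs((centre - yaw_deg + 180.0) % 360.0 - 180.0)
        rates.append(high_kbps if diff <= fov_deg / 2 else low_kbps)
    return rates


# Viewer looking at yaw 0 with a 90-degree field of view, 8 tiles of 45 degrees.
print(assign_bitrates(0.0, 90.0, 8, 4000, 500))
```

Only the two tiles straddling yaw 0 receive the high bitrate, so total bandwidth stays far below streaming the whole sphere at full quality.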

Research Demo 22

Επιλογή* in Crisis**

*επιλογή = επί (= on, for) + λόγος (= reason, cause, speech) επιλογή (= choice) | **crisis = κρίση (= a time of intense difficulty, judgment)

Prof. Manthos Santorineos, Dr. Stavroula Zoi, Dr. Nefeli Dimitriadi, Taxiarchis Diamantopoulos, John Bardakos, Christina Chrysanthopoulou, Ifigeneia Mavridou, Anna Meli, Nikos Papadopoulos, Argyro Papathanasiou, Maria Velaora

Greek-French Master «Art, virtual reality and multiuser systems of artistic expression» Athens School of Fine Arts

Abstract: “Επιλογή in Crisis” is a work in progress developed by the research group of the Greek-French Master "Art, virtual reality and multiuser systems of artistic expression", a collaboration between the Athens School of Fine Arts and the University Paris 8 Saint-Denis. It is an interactive project, somewhere between a research tool and an experimental game, that takes place in a virtual reality environment. It aims to immerse players inside a system in crisis, so that they are not mere spectators but feel that they share responsibility for the crisis and must act to resolve it. The player's actions are measured and “judged” by the game mechanism itself, which determines the stability or instability of the system.

Research Demo 14

Exploring Virtual Reality and Prosthetic Training

Mr Ivan Phelan

Culture, Communication and Computing Research Institute, Sheffield Hallam University, Sheffield, United Kingdom

Dr Madelynne Arden

Faculty of Development and Society, Sheffield Hallam University, Sheffield, United Kingdom

Mrs Carol Garcia

Faculty of Health and Wellbeing, Sheffield Hallam University, Sheffield, United Kingdom

Dr. Chris Roast

Culture, Communication and Computing Research Institute, Sheffield Hallam University, Sheffield, United Kingdom

Abstract: Working with healthcare professionals and a world-leading bionic prosthetics maker, we created a prototype that aims to reduce the time it takes a transradial amputee to learn to use a myoelectric prosthetic arm. Our research indicates that the Oculus Rift, Microsoft's Kinect and the Thalmic Labs Myo gesture control armband will allow us to create a unique, cost-effective training tool that could benefit amputee patients.

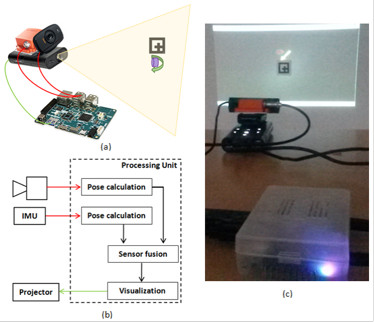

Research Demo 20

Augmented reality for maintenance application on a mobile platform

Lakshmprabha N.S. (1), Panagiotis Mousouliotis (2), Loukas Petrou (3), Stathis Kasderidis (2), Olga Beltramello (1)

(1) European Organization for Nuclear Research, CERN

(2) NOVOCAPTIS

(3) Aristotle University of Thessaloniki

Abstract: Pose estimation is a major requirement for any augmented reality (AR) application. Cameras and inertial measurement units (IMUs) have been used for pose estimation not only in AR but also in many other fields. The accuracy and pose update rate required in an AR application are more demanding than in other fields. In certain AR applications (maintenance, for example), a small change in pose can cause a large deviation in where the virtual content is rendered, which misleads the user about an object's location and can display incorrect information. Furthermore, the large processing power required for camera-based pose estimation results in a bulky system, which reduces its mobility and ergonomics. This demonstration shows fast pose estimation using a camera and an IMU on a mobile platform for augmented reality in a maintenance application.

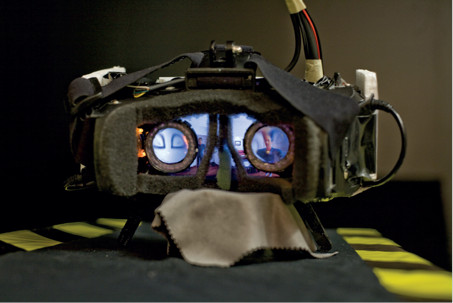
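The abstract does not detail the fusion algorithm. To illustrate why combining a camera with an IMU helps, the sketch below applies a standard complementary filter to a single yaw angle, a swapped-in technique rather than the authors' method: the gyroscope is integrated at a high rate, and whenever a slower camera estimate is available it pulls the estimate back to cancel drift. The blend factor `alpha` and the one-axis simplification are assumptions, and angle wrap-around at ±180° is ignored.

```python
def complementary_filter(gyro_rates, camera_yaws, dt, alpha=0.98):
    """Fuse gyroscope yaw rate (deg/s) with camera yaw estimates (deg).

    `camera_yaws` may contain None where no camera pose is available (e.g.
    the slower camera has not produced a new frame); in that case only the
    gyro integration is used.
    """
    yaw = camera_yaws[0] if camera_yaws[0] is not None else 0.0
    estimates = []
    for rate, cam in zip(gyro_rates, camera_yaws):
        predicted = yaw + rate * dt  # fast, but drifts if the gyro is biased
        if cam is None:
            yaw = predicted
        else:
            # Blend in the slower, drift-free camera measurement.
            yaw = alpha * predicted + (1 - alpha) * cam
        estimates.append(yaw)
    return estimates


# A gyro with a 1 deg/s bias while the device is actually still at yaw 0.
fused = complementary_filter([1.0] * 1000, [0.0] * 1000, 0.01)
gyro_only = complementary_filter([1.0] * 1000, [None] * 1000, 0.01)
```

After 10 s the gyro-only estimate has drifted to 10°, while the fused estimate settles just under 0.5°, showing how occasional camera fixes bound IMU drift.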

Research Demo 1

Non-Obscuring Binocular Eye Tracking for Wide Field-of-View Head-Mounted Displays

Michael Stengel*, Steve Grogorick*, Martin Eisemann*, Elmar Eisemann+, Marcus Magnor+

*TU Braunschweig, +TU Delft

Abstract: We present a complete hardware and software solution for integrating binocular eye tracking into current state-of-the-art lens-based head-mounted displays (HMDs) without affecting the user's wide field of view of the display. The system uses robust and efficient new algorithms for calibration and pupil tracking and enables real-time eye tracking and gaze estimation. Estimating the user's relative gaze direction opens the door to a much wider spectrum of virtual reality applications and games on HMDs. We show a low-cost, 3D-printed HMD prototype with eye tracking that is simple to fabricate, and we discuss a variety of VR applications that use gaze estimation.

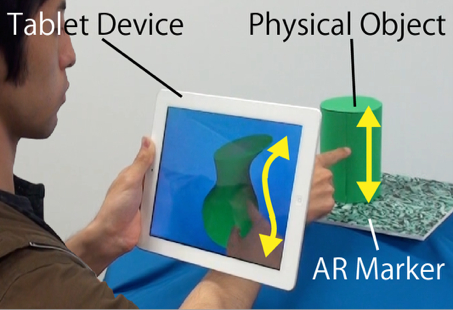
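Many infrared eye trackers start pupil detection from the observation that, with off-axis illumination, the pupil is the darkest region of the eye image. The sketch below estimates the pupil centre as the centroid of pixels below a brightness threshold. It is a generic baseline under that assumption, not the robust algorithm the authors present, and the threshold and function name are hypothetical.

```python
def pupil_centre(image, threshold):
    """Estimate the pupil centre as the centroid of dark pixels.

    `image` is a 2D list of grey levels (0 = black). Returns (x, y) in
    pixel coordinates, or None if no pixel is darker than `threshold`.
    """
    sx = sy = count = 0
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if value < threshold:
                sx += x
                sy += y
                count += 1
    if count == 0:
        return None
    return (sx / count, sy / count)
```

In practice, eyelashes and shadows also produce dark pixels, which is why practical trackers add steps such as connected-component filtering or ellipse fitting on top of this idea.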

Research Demo 2

Dynamic 3D Interaction using an Optical See-through HMD

Nozomi Sugiura, Takashi Komuro

Saitama University

Abstract: We propose a system that enables dynamic 3D interaction with real and virtual objects using an optical see-through head-mounted display and an RGB-D camera. Virtual objects move according to physical laws: the system uses a physics engine to compute their motion and to detect collisions. In addition, the system detects collisions between virtual objects and real objects in the three-dimensional scene captured by the camera, which is updated dynamically. The user wears the device and interacts with virtual objects while seated. Interacting with virtual objects in this way gives users a strong sense of reality.

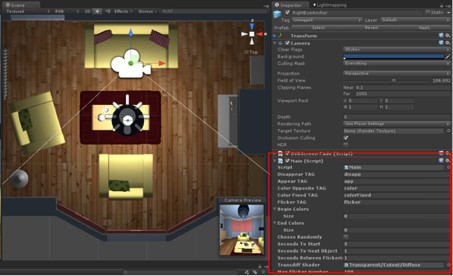
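Collision detection against the live RGB-D scene can be sketched as follows, assuming a pinhole camera model: each depth pixel is back-projected into a 3D point, and a virtual sphere collides with the real scene if any point lies inside it. Because the point cloud is rebuilt every frame, moving real objects are handled automatically. The intrinsics (`fx`, `fy`, `ppx`, `ppy`) and function names are hypothetical, and a physics engine would normally use an accelerated spatial structure rather than this linear scan.

```python
def depth_to_points(depth, fx, fy, ppx, ppy):
    """Back-project a depth map (2D list, metres) into 3D camera-space points
    using pinhole intrinsics. Pixels with depth <= 0 are treated as invalid."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue
            points.append(((u - ppx) * z / fx, (v - ppy) * z / fy, z))
    return points


def sphere_hits_point_cloud(centre, radius, points):
    """Return the first real-world point inside a virtual sphere, or None."""
    cx, cy, cz = centre
    r2 = radius * radius
    for p in points:
        dx, dy, dz = p[0] - cx, p[1] - cy, p[2] - cz
        if dx * dx + dy * dy + dz * dz <= r2:
            return p
    return None
```

A detected contact point could then be passed to the physics engine as a collision with a static body so that the virtual object bounces off the real surface.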

Research Demo 18

Virtual Reality toolbox for experimental psychology – Research Demo

Madis Vasser*, Markus Kängsepp, Kälver Kilvits, Taavi Kivisik, Jaan Aru

University of Tartu

Abstract: We present a general toolbox for virtual reality (VR) research in psychology. Our aim is to simplify the creation and setup of complex VR scenes for researchers. Various study protocols on perception, attention, cognition and memory can be constructed with our toolbox. Here we specifically showcase a fully functional demo of the change blindness phenomenon.

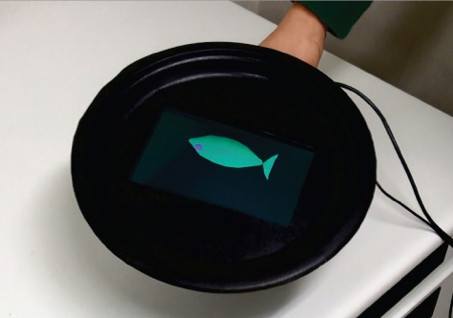

Research Demo 4

Three-dimensional VR Interaction Using the Movement of a Mobile Display

Lili Wang, Takashi Komuro

Saitama University

Abstract: In this study, we propose a VR system that allows various types of interaction with virtual objects using an autostereoscopic mobile display and an accelerometer. The system obtains orientation and motion information from the accelerometer attached to the mobile display and maps it onto the motion of virtual objects. It presents 3D images with motion parallax by estimating the position of the user's viewpoint and displaying correctly projected images. Furthermore, our method seamlessly connects the real and virtual spaces through the mobile display by choosing the coordinate system so that one of the horizontal surfaces in the virtual space coincides with the display surface. To show the effectiveness of this concept, we implemented a cooking simulation application that treats the mobile display as a frying pan.
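Orientation from an accelerometer is usually estimated from the direction of gravity while the device is roughly still. The sketch below computes pitch and roll under a common axis convention (z pointing out of the screen) and the in-plane acceleration that would make a frictionless virtual object slide across the tilted display, as food might in the frying-pan demo. The axis convention, function names and frictionless model are assumptions; the authors' mapping from sensor data to object motion is not described.

```python
import math


def tilt_from_accelerometer(ax, ay, az):
    """Return (pitch, roll) in radians from a static accelerometer reading.

    Assumes the device is roughly still, so the reading is dominated by the
    reaction to gravity, and that z points out of the screen.
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return pitch, roll


def slide_acceleration(ax, ay):
    """In-plane acceleration (m/s^2) of a frictionless object on the display.

    At rest the accelerometer measures the opposite of gravity, so gravity's
    component in the display plane is simply (-ax, -ay).
    """
    return (-ax, -ay)


# Device tilted so that its +x edge is lowered by 0.2 rad.
theta = 0.2
reading = (-9.81 * math.sin(theta), 0.0, 9.81 * math.cos(theta))
pitch, roll = tilt_from_accelerometer(*reading)
food_accel = slide_acceleration(reading[0], reading[1])
```

Here the estimated pitch equals the 0.2 rad tilt, and the virtual food accelerates toward the lowered +x edge; any motion of the device itself would add to the reading and would need separate handling.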