Monday, 21st March 2016

10:15 am – 12:30 pm

Virtual Humans and Crowds

Session Chair: Anne-Hélène Olivier

Effects of virtual human appearance fidelity on emotion contagion in affective inter-personal simulations

TVCG Abstract: The ability of realistic versus stylized depictions of virtual humans in simulated inter-personal situations to elicit emotional responses in users has been an open question for artists and researchers alike. We empirically evaluated the effects of realistic vs. non-realistic stylized appearance of virtual humans on the emotional response of participants in a medical virtual reality system that was designed to educate users in recognizing the signs and symptoms of patient deterioration. In the social-emotional constructs of shyness, presence, perceived personality, and enjoyment-joy, we found that participants responded differently in the visually realistic condition than in the cartoon and sketch conditions.

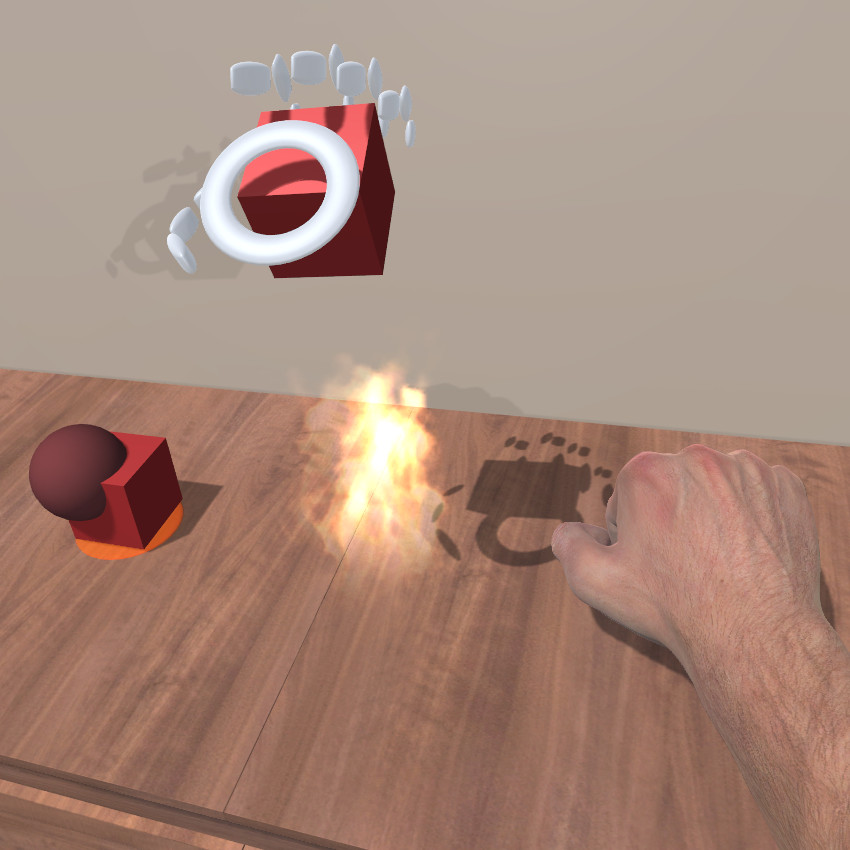

The Role of Interaction in Virtual Embodiment: Effects of the Virtual Hand Representation

IEEE VR proceedings Abstract: How do people appropriate their virtual hand representation when interacting in virtual environments? To answer this question, we conducted an experiment studying the sense of embodiment when interacting with three different virtual hand representations, each providing a different degree of visual realism but using the same control mechanism. The main experimental task was a pick-and-place task in which participants had to grasp a virtual cube and place it at an indicated position while avoiding an obstacle (brick, barbed wire or fire). In an additional task, participants had to perform a potentially endangering operation towards their virtual hand: placing it close to a virtual spinning saw. Both qualitative measures and questionnaire data were gathered to assess the sense of agency and ownership towards each virtual hand. Results show that the sense of agency is stronger for less realistic virtual hands, which also provide less mismatch between the participant’s actions and the animation of the virtual hand. In contrast, the sense of ownership is increased for the human virtual hand, which provides a direct mapping between the degrees of freedom of the real and virtual hand.

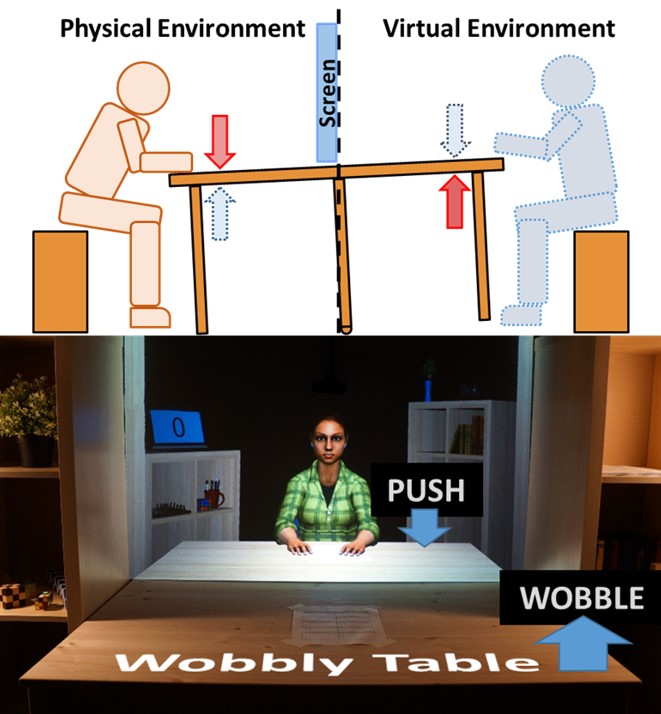

The Wobbly Table: Increased Social Presence via Subtle Incidental Movement of a Real-Virtual Table

IEEE VR proceedings Abstract: In this paper, we examined the effects of subtle incidental movement of a real-virtual table on presence and social presence. A between-subjects design was used, in which the table wobbled in the “wobbly group” while it was fixed in the “control group.” In both groups, participants carried out a conversational task with a virtual human while seated at a table spanning a real-virtual environment. Our study showed that the wobbly group exhibited a general increase in presence and social presence, with statistically significant increases in presence, co-presence, and attentional allocation. In addition, participants in the wobbly group showed more affective attraction to the virtual human.

Do Variations in Agency Indirectly Affect Behavior with Others? An Analysis of Gaze Behavior

TVCG Abstract: We consider whether one teammate’s agency can indirectly affect behavior with other teammates, as well as directly affect behavior with that teammate. To do so, we examined gaze behavior during a training exercise in which sixty-nine nurses worked with two teammates. The agency of the two teammates was varied between conditions. Nurses’ gaze behavior was coded using videos of their interactions. Agency was observed to directly affect behavior, such that participants spent more time gazing at virtual teammates than at human teammates. However, participants continued to obey polite gaze norms with virtual teammates. In contrast, agency was not observed to indirectly affect gaze behavior.

MMSpace: Kinetically-augmented telepresence for small group-to-group conversations

IEEE VR proceedings Abstract: A research prototype, MMSpace, was developed for realistic social telepresence in small group-to-group conversations. It consists of kinetic display avatars that can change their screen pose and position by automatically mirroring the remote user’s head motions. Using 4-degree-of-freedom actuators, the kinetic avatars of MMSpace can produce highly accurate physical motions. MMSpace supports eye contact between every pair of participants by integrating multimodal visual attention cues. Subjective evaluations based on group discussions (2 × 2 setting) indicate that the kinetic display avatar is superior to static displays in gaze awareness, eye contact, perception of nonverbal behaviors, mutual understanding, and sense of telepresence.

Interactive and Adaptive Data-Driven Crowd Simulation

IEEE VR proceedings Abstract: We present an adaptive data-driven algorithm for interactive crowd simulation. Our approach combines realistic trajectory behaviors extracted from videos with synthetic multi-agent algorithms to generate plausible simulations. We use statistical techniques to compute the movement patterns and motion dynamics from noisy 2D trajectories extracted from crowd videos. These learned pedestrian dynamics characteristics are used to generate collision-free trajectories of virtual pedestrians in slightly different environments or situations. The overall approach is robust and can generate perceptually realistic crowd movements at interactive rates in dynamic environments. We also present results from preliminary user studies that evaluate our algorithm.

3:30 pm – 5:00 pm

Ears and Hands

Session Chair: Sho Sakurai

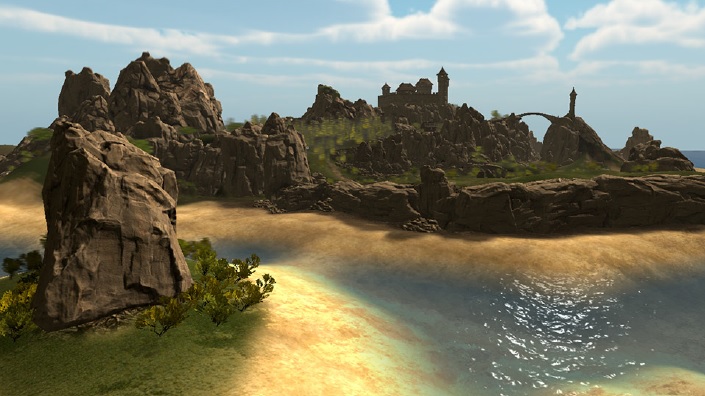

Interactive Coupled Sound Synthesis-Propagation using Single Point Multipole Expansion

TVCG Abstract: Recent research in sound simulation has focused on either sound synthesis or sound propagation, and many standalone algorithms have been developed for each domain. We present a novel technique for coupling sound synthesis with sound propagation to automatically generate realistic aural content for virtual environments. Our approach can generate sounds from rigid bodies based on the vibration modes and radiation coefficients represented by the single-point multipole expansion. We present a mode-adaptive propagation algorithm that uses a perceptual Hankel function approximation technique to achieve interactive runtime performance. The overall approach allows for a high degree of dynamism: it supports dynamic sources, dynamic listeners, and dynamic directivity simultaneously. We have integrated our system with the Unity game engine and demonstrate the effectiveness of this fully automatic technique for audio content creation in complex indoor and outdoor scenes.
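In a single-point multipole expansion, the pressure radiated by each vibration mode is a weighted sum of terms, each the product of a spherical Hankel function h_n(kr) and a spherical harmonic, and the abstract names a Hankel function approximation as the key to interactive performance. The sketch below is a generic illustration, not the authors' perceptual approximation: assuming an e^{iωt} time convention (so h_n^(2) is outgoing), it evaluates h_n^(2) by the standard upward recurrence and compares it with the cheap far-field asymptote i^{n+1}e^{-ix}/x, the kind of accuracy-for-speed trade an approximation scheme can exploit. The function names are hypothetical.

```python
import numpy as np

def spherical_hankel2(n_max, x):
    """Return [h_0^(2)(x), ..., h_{n_max}^(2)(x)] with h_n^(2) = j_n - i*y_n, x > 0.

    Uses the upward recurrence f_{n+1} = (2n+1)/x * f_n - f_{n-1}, which j_n,
    y_n and hence h_n all satisfy. It is well behaved for the n <= x cases below.
    """
    x = float(x)
    h = np.empty(n_max + 1, dtype=complex)
    # Closed forms for orders 0 and 1 seed the recurrence.
    j0, y0 = np.sin(x) / x, -np.cos(x) / x
    h[0] = j0 - 1j * y0
    if n_max >= 1:
        j1 = np.sin(x) / x**2 - np.cos(x) / x
        y1 = -np.cos(x) / x**2 - np.sin(x) / x
        h[1] = j1 - 1j * y1
    for n in range(1, n_max):
        h[n + 1] = (2 * n + 1) / x * h[n] - h[n - 1]
    return h

def hankel2_far_field(n, x):
    """Large-argument asymptote h_n^(2)(x) ~ i^(n+1) * exp(-i*x) / x (exact for n = 0)."""
    return (1j) ** (n + 1) * np.exp(-1j * x) / x

# Compare the recurrence value with the asymptote at a small and a large argument kr.
for kr in (2.0, 200.0):
    exact = spherical_hankel2(3, kr)[3]
    approx = hankel2_far_field(3, kr)
    print(f"kr={kr:6.1f}  relative error of far-field h_3: {abs(exact - approx) / abs(exact):.3e}")
```

The asymptote costs one complex exponential per order regardless of n, but its error grows as kr shrinks, which is why any such shortcut has to be applied selectively.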

Efficient HRTF-based Spatial Audio for Area and Volumetric Sources

TVCG Abstract: We present a novel spatial audio rendering technique to handle sound sources that can be represented by either an area or a volume in VR environments. As opposed to point-sampled sound sources, our approach projects the area-volumetric source to the spherical domain centered at the listener and represents this projection area compactly using the spherical harmonic (SH) basis functions. By representing the head-related transfer function (HRTF) in the same basis, we demonstrate that spatial audio corresponding to an area-volumetric source can be efficiently computed as a dot product of the SH coefficients of the projection area and the HRTF. This results in an efficient technique whose computational complexity and memory requirements are independent of the complexity of the sound source. Our approach can support dynamic area-volumetric sound sources at interactive rates. We evaluate the performance of our technique in large complex VR environments and demonstrate significant improvement over the naïve point-sampling technique. We also present results of a user evaluation, conducted to quantify users' subjective preference for our approach over the point-sampling approach in VR environments.
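The central claim above, that the response to an extended source reduces to a dot product of SH coefficients, follows from orthonormality of the basis: if the HRTF is represented as Σ h_i Y_i, then its integral over the projected source region equals Σ h_i f_i, where f_i are the SH coefficients of the region's indicator function. A minimal sketch under stated assumptions: only SH orders 0 and 1 (real systems use higher orders), a cone-shaped projected source, made-up HRTF coefficients for one ear and one frequency bin, and hypothetical helper names.

```python
import numpy as np

def real_sh_order1(theta, phi):
    """Real, orthonormal spherical harmonics of orders 0 and 1.

    theta: polar angle from +z, phi: azimuth. Returns shape (4, *theta.shape)
    ordered as Y_00, Y_1-1 (~y), Y_10 (~z), Y_11 (~x).
    """
    x = np.sin(theta) * np.cos(phi)
    y = np.sin(theta) * np.sin(phi)
    z = np.cos(theta)
    c0 = 0.5 * np.sqrt(1.0 / np.pi)
    c1 = np.sqrt(3.0 / (4.0 * np.pi))
    return np.stack([np.full_like(theta, c0), c1 * y, c1 * z, c1 * x])

def sphere_grid(n_theta=180, n_phi=360):
    """Midpoint quadrature grid over the listener-centred sphere with solid-angle weights."""
    d_theta, d_phi = np.pi / n_theta, 2.0 * np.pi / n_phi
    theta = (np.arange(n_theta) + 0.5) * d_theta
    phi = (np.arange(n_phi) + 0.5) * d_phi
    T, P = np.meshgrid(theta, phi, indexing="ij")
    return T, P, np.sin(T) * d_theta * d_phi

def sh_project(values, basis, weights):
    """SH coefficients c_i = sum_q w_q * Y_i(q) * f(q) of a function sampled on the grid."""
    return np.tensordot(basis, values * weights, axes=([1, 2], [0, 1]))

T, P, w = sphere_grid()
Y = real_sh_order1(T, P)

# Hypothetical source: every direction within 45 degrees of +z, as seen from the listener.
alpha = np.pi / 4
source_mask = (T < alpha).astype(float)
source_coeffs = sh_project(source_mask, Y, w)

# Hypothetical HRTF coefficients for one ear and one frequency bin.
hrtf_coeffs = np.array([1.0, 0.2, -0.1, 0.5])

# The per-bin gain is one 4-term dot product, however complex the source shape was.
gain = float(hrtf_coeffs @ source_coeffs)
print(f"gain = {gain:.4f}")
```

Only the projection step depends on the source shape; once its coefficients are known, the cost per ear and per frequency bin is fixed by the SH order, which matches the complexity claim in the abstract.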

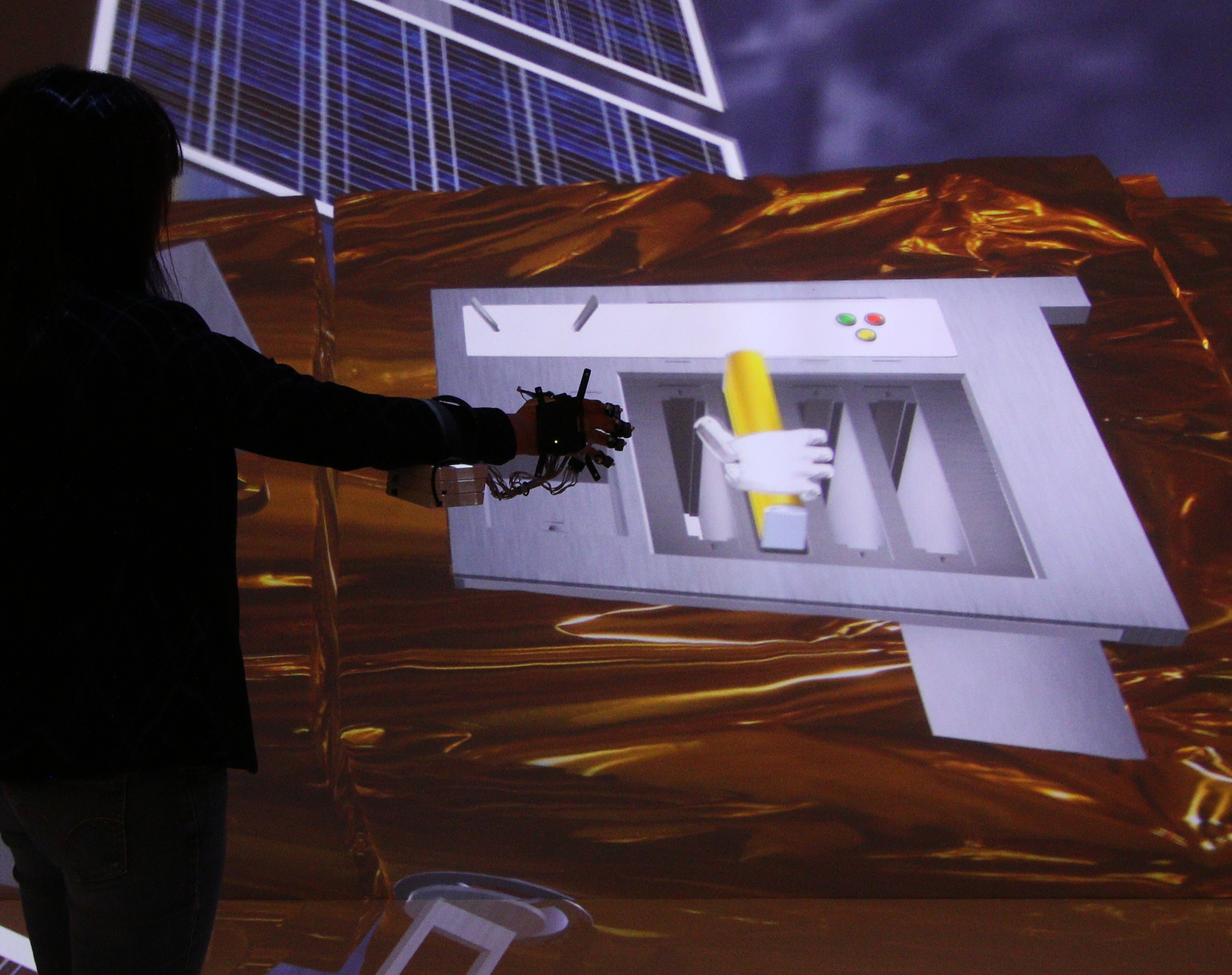

A Lightweight Electrotactile Feedback Device to Improve Grasping in Immersive Virtual Environments

IEEE VR proceedings Abstract: An immersive virtual environment is the ideal platform for planning and training on-orbit servicing missions. In such virtual assembly simulations, grasping virtual objects is one of the most common and natural interactions. In this paper, we present a novel, small and lightweight electrotactile feedback device, specifically designed for immersive virtual environments. We conducted a study to assess the feasibility and usability of our interaction device. Results show that electrotactile feedback improved the user’s grasping in our virtual on-orbit servicing scenario: task completion time was significantly lower and the precision of the user’s interaction was higher.

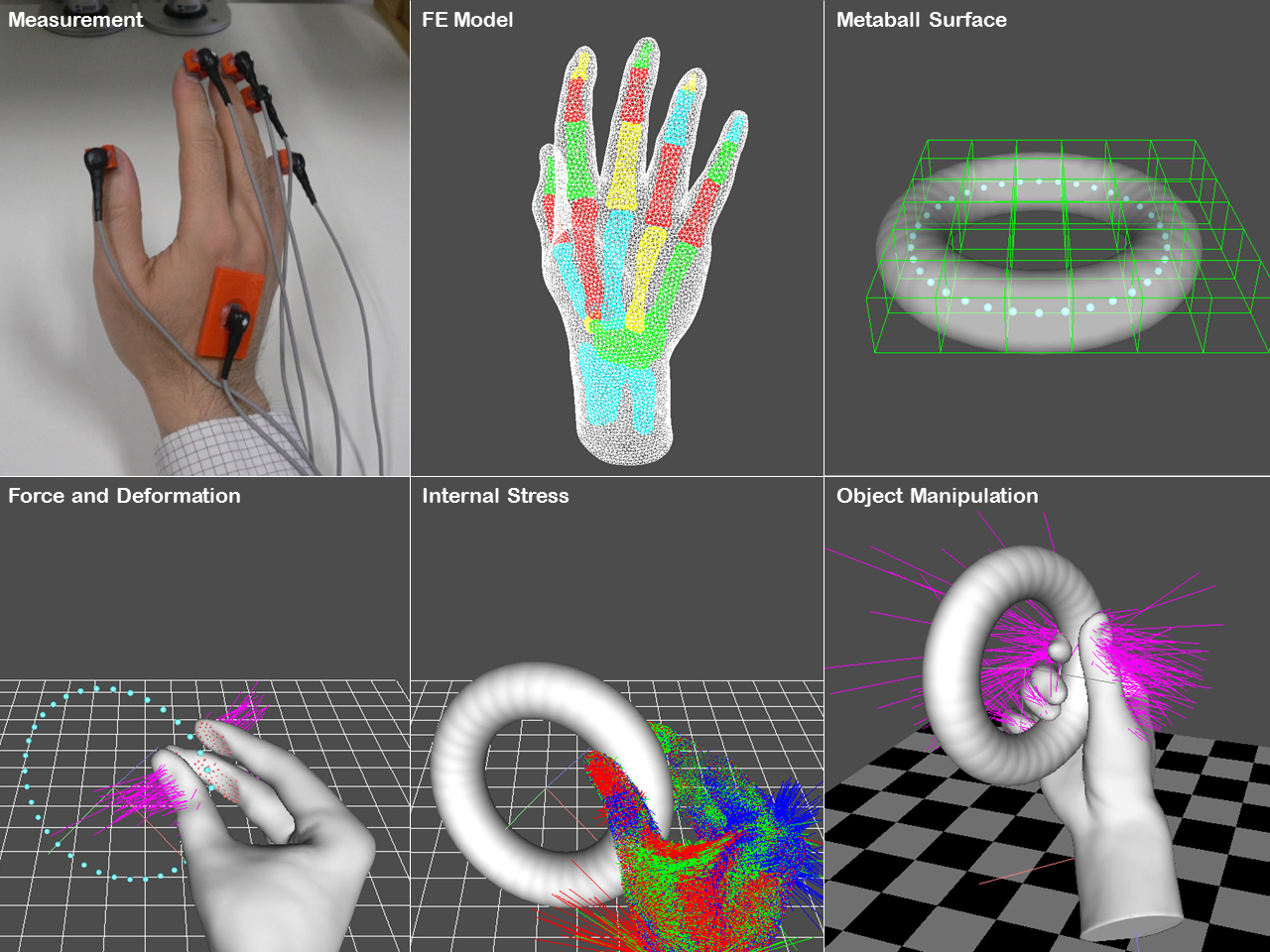

Interaction with Virtual Object using Deformable Hand

IEEE VR proceedings Abstract: This study investigated the implementation of a hand model and a contact simulation method. The proposed method seeks the hand form that minimizes the position and orientation errors on those areas. Deformation of the soft tissue of the hand was simulated by FEM, contact friction was introduced by the penalty method, and a model based on metaballs (or blobs) was employed to represent the smooth surface of the object. The experimental implementation showed that object manipulation such as pinching and grasping is possible and that the simulation can be updated at approximately 50 Hz.
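Of the three ingredients listed above, the metaball object surface and the penalty method are easy to sketch in isolation. The example below assumes one common compactly supported falloff, (1 − d²/R²)², treats the object as the region where the summed field exceeds a threshold, and computes only the normal penalty force on a single hand-surface point; the FEM soft tissue and the friction term are not modelled. The kernel, threshold, stiffness and function names are hypothetical choices, not the paper's.

```python
import numpy as np

def metaball_field(p, centers, radius):
    """Sum of compactly supported falloffs (1 - d^2/R^2)^2 over balls with d < R."""
    d2 = np.sum((centers - p) ** 2, axis=1) / radius**2
    inside = d2 < 1.0
    return float(np.sum((1.0 - d2[inside]) ** 2))

def metaball_gradient(p, centers, radius):
    """Analytic gradient: d/dp (1 - |p-c|^2/R^2)^2 = -4 (1 - |p-c|^2/R^2) (p - c) / R^2."""
    diff = p - centers
    d2 = np.sum(diff**2, axis=1) / radius**2
    inside = d2 < 1.0
    terms = -4.0 * (1.0 - d2[inside])[:, None] * diff[inside] / radius**2
    return terms.sum(axis=0)

def penalty_force(p, centers, radius, threshold=0.5, stiffness=1000.0):
    """Normal penalty force on a hand-surface point p that has entered the object.

    The object occupies {field >= threshold}. Penetration depth is estimated to
    first order as (field - threshold) / |grad field|, and the force pushes p out
    along the outward normal -grad / |grad|. Friction is not modelled here.
    """
    value = metaball_field(p, centers, radius)
    if value <= threshold:
        return np.zeros(3)                 # not in contact
    grad = metaball_gradient(p, centers, radius)
    norm = np.linalg.norm(grad)
    if norm < 1e-12:
        return np.zeros(3)                 # gradient vanishes (e.g. at a ball centre): no direction
    normal = -grad / norm
    depth = (value - threshold) / norm
    return stiffness * depth * normal

# Hypothetical object: two overlapping blobs forming a smooth, capsule-like shape.
blobs = np.array([[0.0, 0.0, 0.0], [0.6, 0.0, 0.0]])
fingertip = np.array([0.3, 0.45, 0.0])
print(penalty_force(fingertip, blobs, radius=1.0))
```

A smooth implicit surface gives a well-defined normal everywhere, which keeps penalty forces from jumping as a finger slides across what would otherwise be polygon edges.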

3:30 pm – 5:00 pm

IEEE Computer Graphics & Applications

Spatial User Interfaces for Large-Scale Projector-Based Augmented Reality

Abstract: Spatial augmented reality applies the concepts of spatial user interfaces to large-scale, projector-based augmented reality. Such virtual environments have interesting characteristics. They deal with large physical objects, the projection surfaces are nonplanar, the physical objects provide natural passive haptic feedback, and the systems naturally support collaboration between users. The article describes how these features affect the design of spatial user interfaces for these environments and explores promising research directions and application domains.

The Reality Deck – an Immersive Gigapixel Display

Abstract: The Reality Deck is a visualization facility offering state-of-the-art aggregate resolution and immersion. It is a 1.5-Gpixel immersive tiled display with a full 360-degree horizontal field of view. Comprising 416 high-density LED-backlit LCD displays, it visualizes gigapixel-resolution data while providing 20/20 visual acuity for most of the visualization space.

Tuesday, 22nd March 2016

8:30 am – 10:00 am

System and Latency

Session Chair: Eric Ragan

From Motion to Photons in 80 Microseconds: Towards Minimal Latency for Virtual and Augmented Reality

TVCG Abstract: We describe an AR see-through DMD display with an extremely fast (16 kHz) update rate, combining post-rendering 2D offsets and just-in-time tracking updates with a novel modulation technique for turning binary pixels into grayscale. These processing elements are mounted along with the optical display elements in a head-tracked rig where users view synthetic imagery superimposed on their real environment. Combining near-zero-latency mechanical tracking with FPGA display processing gives us a measured average end-to-end latency of 80 µs and a versatile test platform for extremely-low-latency display systems. We have examined the trade-offs between image quality and complexity and have maintained quality with this simple display modulation.
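The abstract mentions a novel modulation technique for turning binary DMD pixels into grayscale but does not describe it. For orientation only, the sketch below shows a generic, well-known alternative, first-order sigma-delta (error-accumulation) modulation of one pixel across binary sub-frames; it is not the authors' method, and the function name is hypothetical. At the quoted 16 kHz rate each binary sub-frame lasts 1/16,000 s = 62.5 µs, so the measured 80 µs latency is on the order of a single binary frame.

```python
def binary_subframes(gray, n_frames):
    """First-order sigma-delta (error accumulation) modulation of one pixel.

    Emits a 0/1 value per binary sub-frame so that the running mean of the
    output tracks the target intensity `gray` in [0, 1]; after N sub-frames the
    mean differs from `gray` by less than 1/N.
    """
    if not 0.0 <= gray <= 1.0:
        raise ValueError("gray must lie in [0, 1]")
    accumulator, frames = 0.0, []
    for _ in range(n_frames):
        accumulator += gray
        if accumulator >= 1.0:
            frames.append(1)
            accumulator -= 1.0
        else:
            frames.append(0)
    return frames

SUBFRAME_RATE_HZ = 16_000                      # binary update rate quoted in the abstract
print(f"sub-frame period: {1e6 / SUBFRAME_RATE_HZ} us")
frames = binary_subframes(0.3, 64)
print(sum(frames) / len(frames))
```

Because the accumulator carries the residual error forward, the eye's temporal integration sees the intended gray level without waiting for a full fixed-length PWM cycle.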

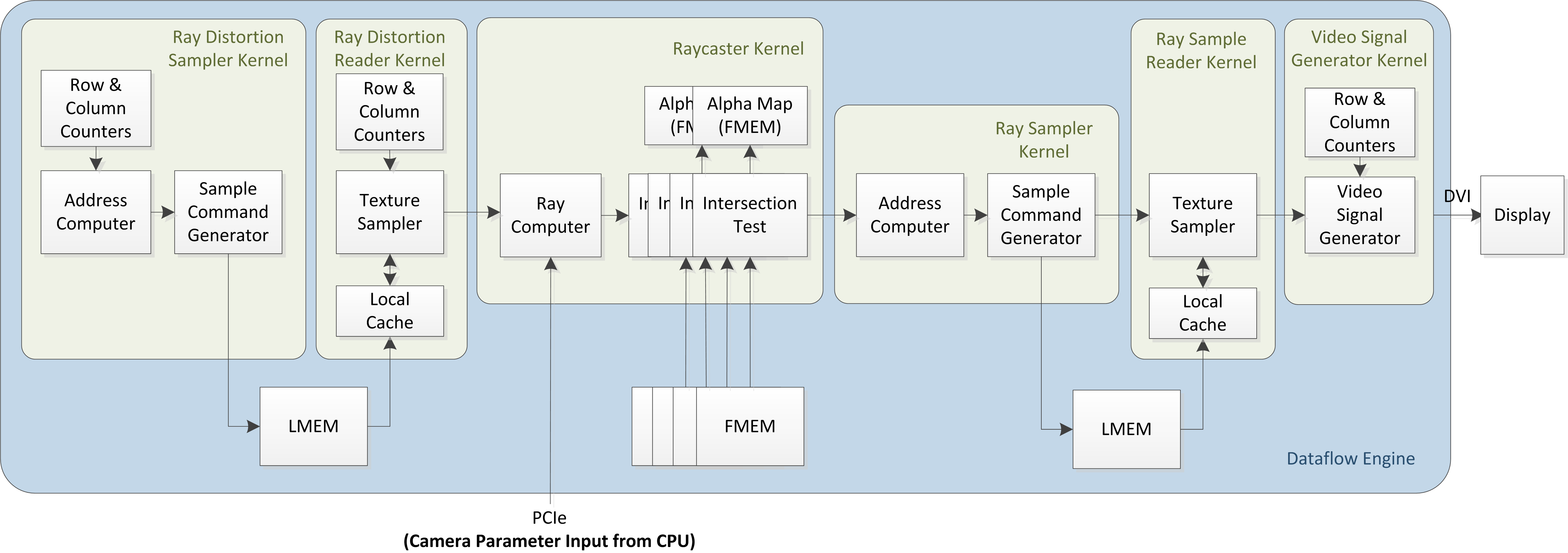

Construction and Evaluation of an Ultra Low Latency Frameless Renderer for VR

TVCG Abstract: Latency is detrimental to VR. Although it is typically considered to be discrete in time and space, in practice latency varies across the display, and how it does so depends on the rendering approach used. We present an ultra-low-latency real-time ray caster: a frameless renderer with a latency profile distinct from that of a traditional frame-based system. We examine its performance when driving an Oculus DK2, comparing it with a GPU-based system. We find that ours, with its lower latency, has higher fidelity under user motion, and that low display persistence reduces the sensitivity to velocity of both systems.

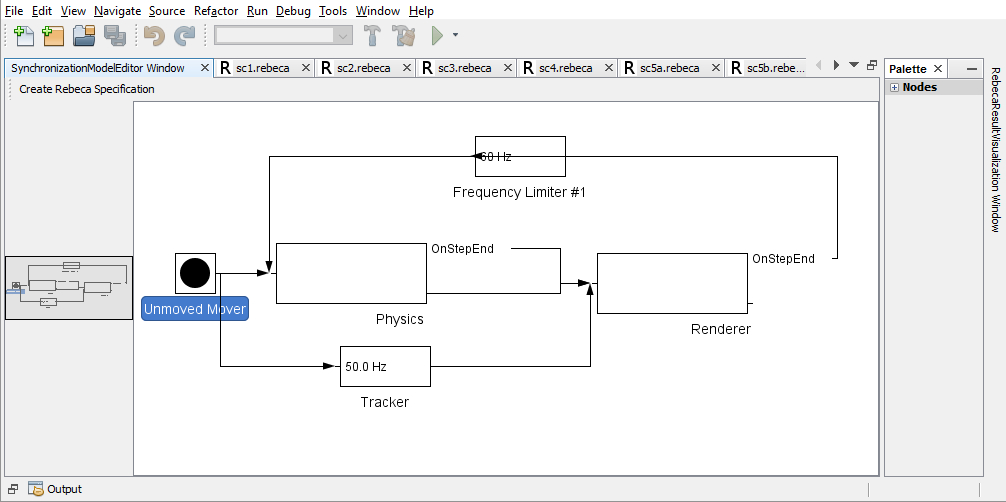

Estimating latency and concurrency of Asynchronous Real-Time Interactive Systems using Model Checking

IEEE VR proceedings Abstract: This article introduces model checking as an alternative method to estimate the latency and parallelism of asynchronous Real-time Interactive Systems (RISs). Five typical concurrency and synchronization schemes often found in concurrent Virtual Reality systems are identified as use cases. Several model-checking tools are evaluated against typical requirements in the RIS area. The formal language Rebeca and its model checker RMC are applied to the specification of the use cases to estimate latency and parallelism for each case. The estimates are compared to results measured by classical profiling.

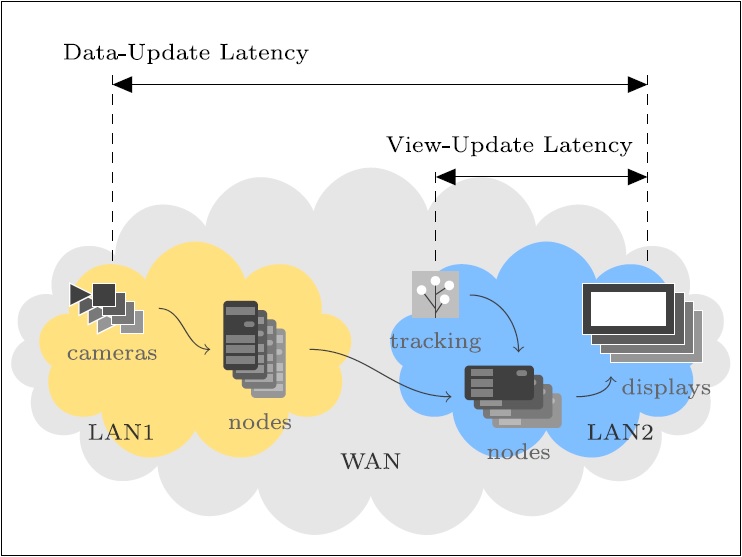

Latency in Distributed Acquisition and Rendering for Telepresence Systems

TVCG Presentation Abstract: Telepresence systems use 3D techniques to create more natural, human-centered communication over long distances. This work concentrates on the analysis of latency in telepresence systems where acquisition and rendering are distributed. Keeping latency low is important to immerse users in the virtual environment. To better understand latency problems and to identify the sources of such latency, we focus on the decomposition of system latency into sub-latencies. We contribute a model of latency and show how it can be used to estimate latencies in a complex telepresence dataflow network. To compare the estimates with real latencies in our prototype, we modify two common latency measurement methods. The presented methodology enables developers to optimize the design, find implementation issues and gain deeper knowledge about specific sources of latency.
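Decomposing system latency into sub-latencies can be pictured as annotating each node of a dataflow graph with its own delay and propagating arrival times downstream. The sketch below assumes a deliberately simple model, not the authors' published one: a stage starts once its slowest input has arrived, so end-to-end latency is the longest path through the graph, and queueing and clock skew are ignored. The stage names and millisecond values are hypothetical.

```python
from functools import lru_cache

# Hypothetical telepresence dataflow: stage -> (own latency in ms, upstream stages).
PIPELINE = {
    "capture_rgbd": (33.0, []),
    "capture_audio": (10.0, []),
    "reconstruct": (25.0, ["capture_rgbd"]),
    "compress": (8.0, ["reconstruct"]),
    "network": (40.0, ["compress", "capture_audio"]),
    "decompress": (6.0, ["network"]),
    "render": (11.0, ["decompress"]),
    "display": (8.0, ["render"]),
}

def arrival_times(graph):
    """Return a function giving each stage's latency since capture.

    Assumed model: a stage starts once its slowest input has arrived, then adds
    its own sub-latency. Queueing and clock skew are ignored.
    """
    @lru_cache(maxsize=None)
    def arrival(stage):
        own, inputs = graph[stage]
        return own + max((arrival(s) for s in inputs), default=0.0)
    return arrival

def critical_path(graph, sink):
    """Walk back from `sink`, always following the input that arrives last."""
    arrival = arrival_times(graph)
    path = [sink]
    while graph[path[-1]][1]:
        path.append(max(graph[path[-1]][1], key=arrival))
    return path[::-1], arrival(sink)

path, total_ms = critical_path(PIPELINE, "display")
print(" -> ".join(path), f"= {total_ms} ms")
```

The critical path shows which sub-latencies are worth optimizing: shaving time off `capture_audio` here would not change the end-to-end figure at all.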

10:15 am – 12:30 pm

Perception and Cognition

Session Chair: Tabitha Peck

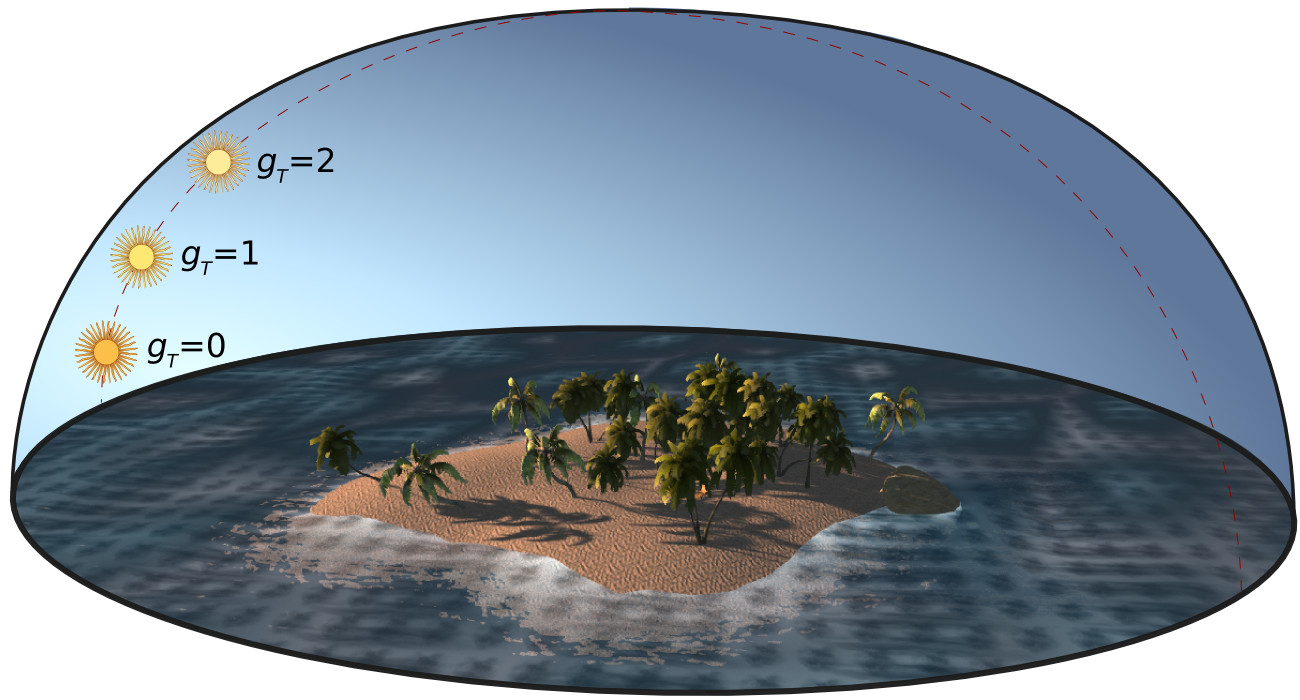

Who turned the clock? Effects of Manipulated Zeitgebers, Cognitive Load and Immersion on Time Estimation

TVCG Abstract: In this article we explore the effects of manipulated zeitgebers, cognitive load and immersion on time estimation, as yet unexplored factors of spatiotemporal perception in virtual environments. We present an experiment in which we analyze human sensitivity to temporal durations with an HMD. We found that manipulating external zeitgebers through natural or unnatural movement of the virtual sun had a significant effect on time judgments. Moreover, using the dual-task paradigm, the results show that increased spatial and verbal cognitive load resulted in a significant shortening of judged time, as well as an interaction with the external zeitgebers.

Augmented Reality as a Countermeasure for Sleep Deprivation

TVCG Abstract: Sleep deprivation is known to have serious deleterious effects on executive functioning and job performance. Augmented reality can place pertinent information at the fore, guiding visual focus and reducing instructional complexity. This paper presents a study exploring how spatial augmented reality instructions affect the procedural task performance of sleep-deprived users. The user study examined performance on a procedural task at six time points over the course of a night of total sleep deprivation. Tasks were provided either by spatial augmented reality projections or on an adjacent monitor. The results indicate that participant errors increased significantly in the monitor condition when participants were sleep deprived. The augmented reality condition had a positive influence, with neither participant errors nor completion time increasing significantly under sleep deprivation. The results of our study show that spatial augmented reality is an effective sleep deprivation countermeasure under laboratory conditions.

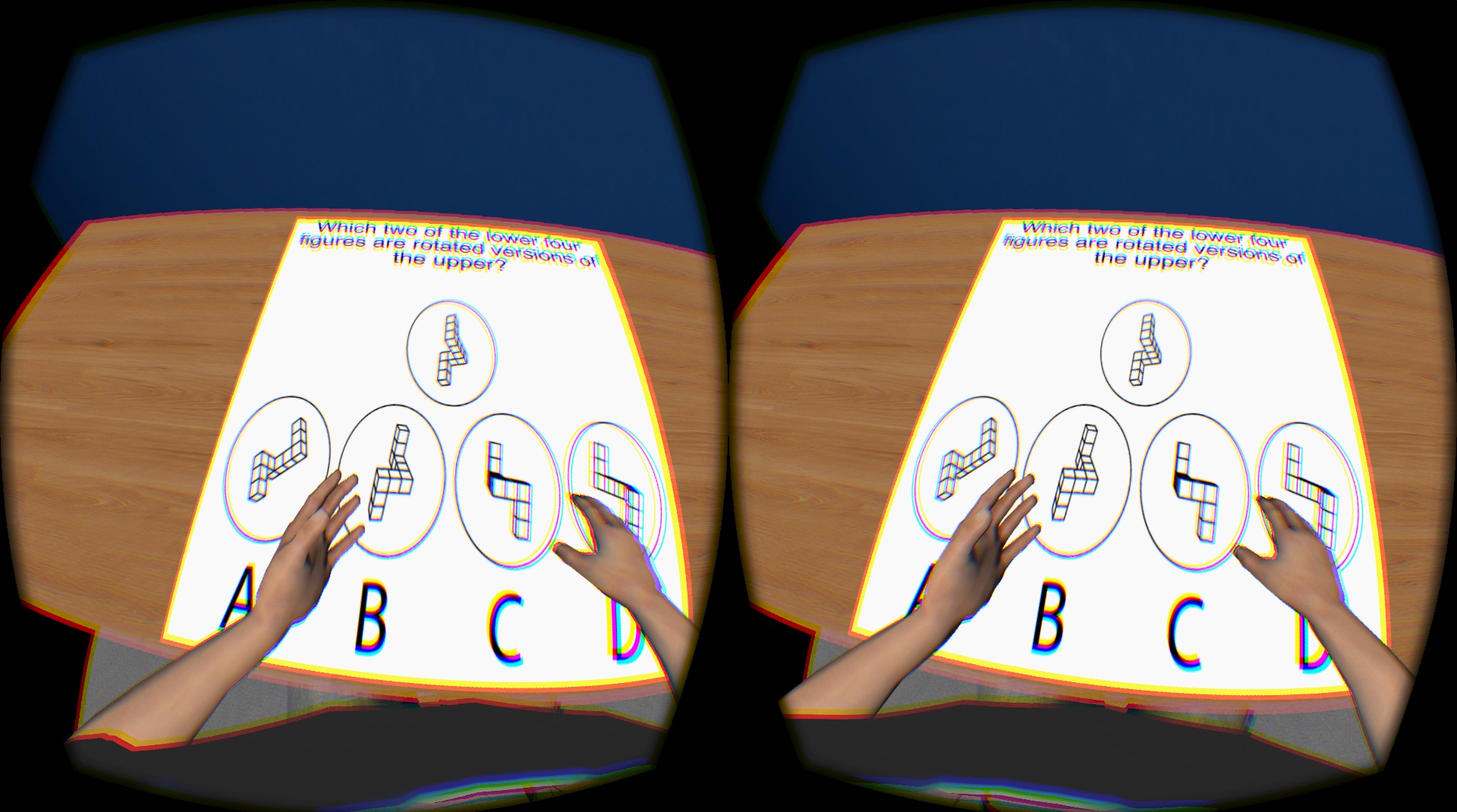

The Impact of a Self-Avatar on Cognitive Ability in Immersive Virtual Reality

IEEE VR proceedings Abstract: We demonstrate that a self-avatar may aid the participant’s cognitive processes while immersed in a virtual reality system. Participants were asked to memorise pairs of letters, perform a spatial rotation exercise and then recall the pairs of letters. In a between-subject factor they either had an avatar or not, and in a within-subject factor they were instructed to keep their hands still or not. We found that participants who both had an avatar and were allowed to move their hands had significantly higher letter pair recall. There was no significant difference between the other three conditions.

An ‘In the Wild’ Experiment on Presence and Embodiment using Consumer Virtual Reality Equipment

TVCG Abstract: We report on a study of presence and embodiment within virtual reality that was conducted ‘in the wild’. Users of Samsung Gear VR and Google Cardboard ran an app that presented a scenario in which the participant sat in a bar watching a singer. Despite the uncontrolled setting of the experiment, results from an in-app questionnaire showed tentative evidence that a self-avatar had a positive effect on self-reported presence and embodiment, and that having the singer invite the participant to tap along had a negative effect on self-reported embodiment.

Testing intuitive decision-making in VR: personality traits predict decisions in an accident situation

IEEE VR proceedings Abstract: Our study used virtual reality to investigate intuitive decision-making under time pressure. During an immersive racing game, participants were suddenly confronted with pedestrians appearing on the course. We observed three different reactions to this accident situation: group 1 did not brake, group 2 braked, and group 3 tried to avoid the pedestrians. Importantly, we found from personality surveys that the no-brake group had lower scores in perspective-taking and higher scores in psychopathy compared to the other groups. Our results demonstrate that personality differences are able to predict intuitive decision-making and that such processes can be studied in VR simulations.

Head tracking Latency in Virtual Environments Revisited: Do users with multiple sclerosis notice latency less?

TVCG Presentation Abstract: Latency (i.e., time delay) in a Virtual Environment is known to disrupt user performance and presence and to induce simulator sickness. Thus, with the emerging use of Virtual Rehabilitation, the latency perception thresholds of target populations need to be considered to fully understand and possibly control the implications of latency in a Virtual Rehabilitation environment. We present a study that quantifies the latency discrimination thresholds of a yet untested population, a specific subset of mobility-impaired participants who suffer from Multiple Sclerosis (MS), and compare the results to a control group of healthy participants. The study was modeled after previous latency discrimination research and shows significant differences in latency perception between the two populations, with MS participants showing lower sensitivity to latency than healthy participants.

1:45 pm – 3:15 pm

Augmented Reality and Calibration

Session Chair: Daisuke Iwai

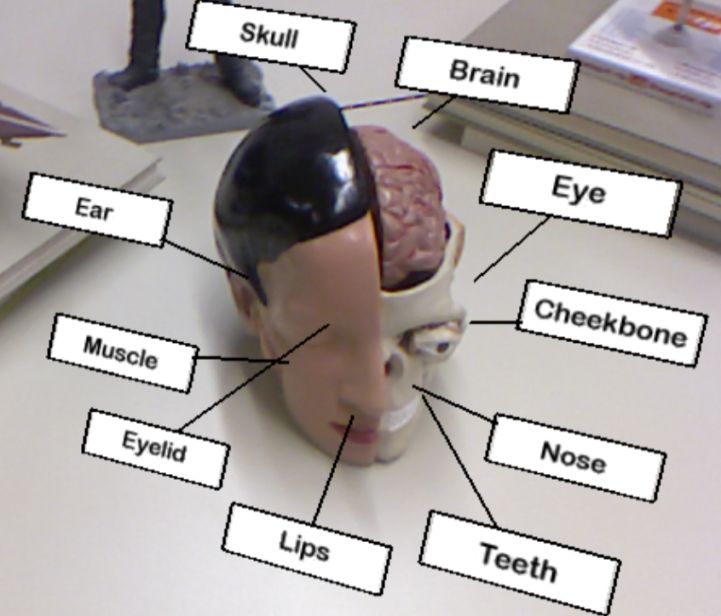

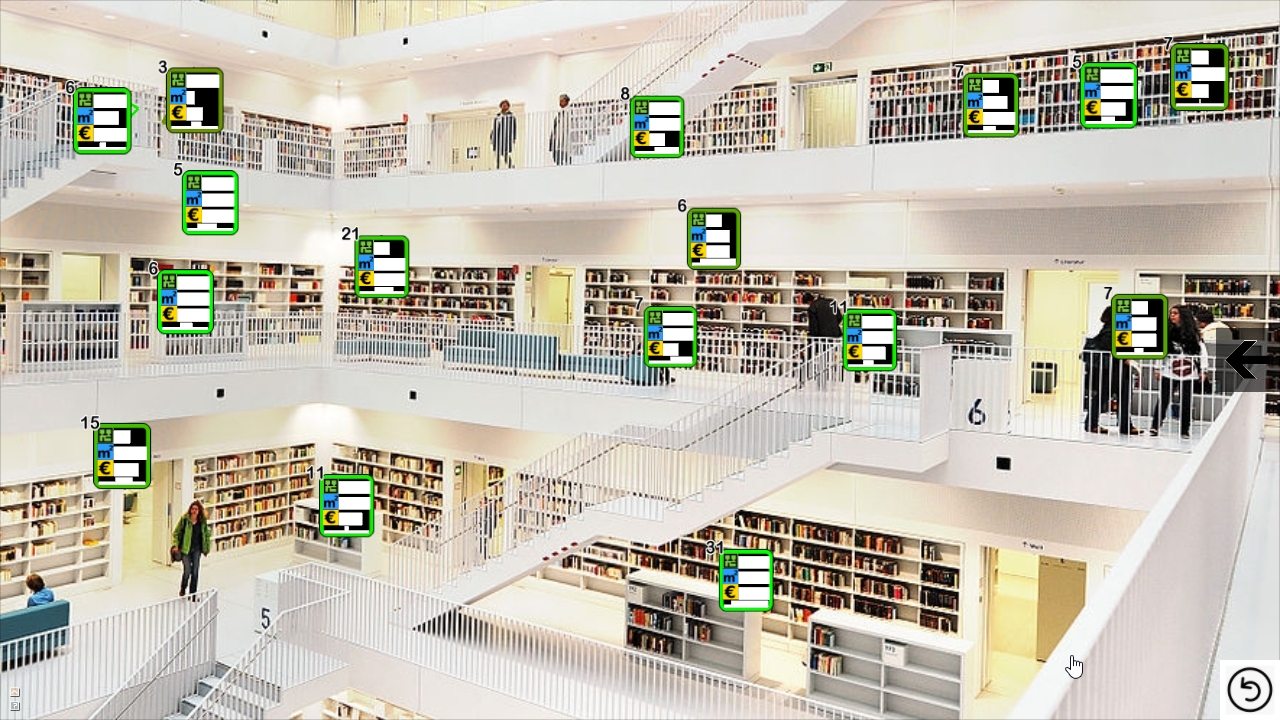

Temporal Coherence Strategies for Augmented Reality Labeling

TVCG Abstract: Temporal coherence of annotations is an important factor in augmented reality user interfaces and for information visualization. We empirically evaluate four different techniques for annotation. Based on these findings, we follow up with subjective evaluations in a second experiment. Results show that presenting annotations in object space or image space leads to a significant difference in task performance. Furthermore, there is a significant interaction between rendering space and the update frequency of annotations. Participants improve significantly in locating annotations when annotations are presented in object space and the view management update rate is limited. In the follow-up experiment, participants appear to be more satisfied with a limited update rate than with a continuous update rate of the view management system.
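Limiting the view management update rate can be illustrated independently of any particular layout algorithm. In the hypothetical sketch below, labels follow their anchors every frame (as in object space), but the decision about where each label sits relative to its anchor is recomputed at most once per interval. The class, the toy layout function, the 250 ms interval and the pixel coordinates are all assumptions for illustration, not the paper's techniques.

```python
class RateLimitedLayout:
    """Recompute label offsets at most once per `min_interval_ms`.

    Labels follow their anchors every frame; only the layout decision is
    throttled, so labels do not jump to new slots on every frame. Assumes the
    set of anchors stays the same between recomputations.
    """

    def __init__(self, layout_fn, min_interval_ms):
        self.layout_fn = layout_fn
        self.min_interval_ms = min_interval_ms
        self._last_ms = None
        self._offsets = None

    def update(self, now_ms, anchors):
        if self._last_ms is None or now_ms - self._last_ms >= self.min_interval_ms:
            self._offsets = self.layout_fn(anchors)
            self._last_ms = now_ms
        return [(x + dx, y + dy) for (x, y), (dx, dy) in zip(anchors, self._offsets)]

layout_calls = []

def toy_layout(anchors):
    """Hypothetical stand-in for a view-management solver: alternate label offsets."""
    layout_calls.append(len(anchors))
    return [(10, -10) if i % 2 == 0 else (10, 10) for i in range(len(anchors))]

layout = RateLimitedLayout(toy_layout, min_interval_ms=250)
for now_ms in range(0, 1000, 10):              # 100 frames at 100 Hz
    labels = layout.update(now_ms, [(100, 200), (300, 120)])
print(len(layout_calls), labels)
```

Integer milliseconds avoid floating-point edge cases when comparing elapsed time against the interval.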

Adaptive Information Density for Augmented Reality Displays

IEEE VR proceedings Abstract: Augmented Reality (AR) browsers show geo-referenced data in the current view of a user. When the amount of data grows too large, the display quickly becomes cluttered. We present an adaptive information density display for AR that balances the amount of presented information against the potential clutter created by placing items on the screen. We use hierarchical clustering to create a level-of-detail structure, in which nodes closer to the root encompass groups of items, while the leaf nodes contain single items. Users preferred our interface because it provided a better overview of the data and allowed for easier comparison. We evaluated the effect of different degrees of clustering on search and recall tasks. Users generally made fewer errors when using our interface for a search task, which indicates that the reduced clutter allowed them to stay focused on finding the relevant items.
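A hierarchical clustering yields the level-of-detail structure described above; choosing how many nodes to show then amounts to cutting the hierarchy at some depth. The sketch below is one hypothetical way to do this, not the paper's algorithm: plain centroid-linkage agglomerative clustering in screen space that stops merging once the number of displayed groups fits a clutter budget. The function name, the budget and the item positions are assumptions.

```python
import numpy as np

def cluster_to_budget(points, budget):
    """Agglomerative (centroid-linkage) clustering that repeatedly merges the two
    closest clusters until at most `budget` remain.

    Each merge adds one level to the hierarchy, so stopping early corresponds to
    cutting the tree closer to the leaves (more, smaller groups on screen).
    """
    pts = np.asarray(points, dtype=float)
    clusters = [[i] for i in range(len(pts))]
    while len(clusters) > budget:
        cents = np.array([pts[c].mean(axis=0) for c in clusters])
        dist = np.linalg.norm(cents[:, None, :] - cents[None, :, :], axis=-1)
        np.fill_diagonal(dist, np.inf)         # ignore self-distances
        i, j = np.unravel_index(np.argmin(dist), dist.shape)
        i, j = min(i, j), max(i, j)
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return clusters

# Hypothetical geo-referenced items projected to screen coordinates (pixels).
items = [(0, 0), (12, 4), (400, 300), (410, 296), (800, 20), (806, 31)]
for budget in (6, 3, 1):
    print(budget, cluster_to_budget(items, budget))
```

The naive loop recomputes all centroid distances after each merge, which is fine for a handful of labels but would need a priority queue for large data sets.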

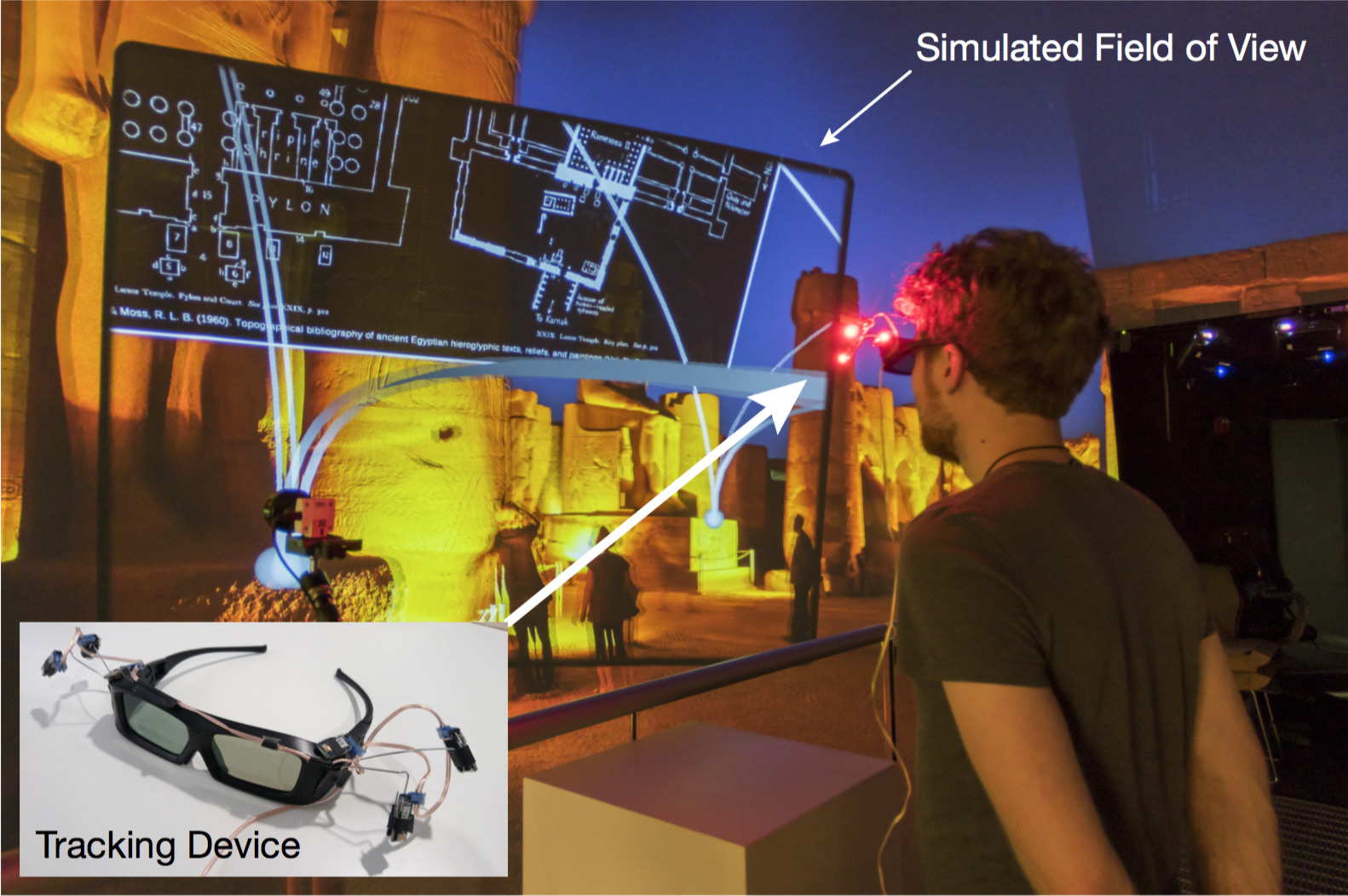

Evaluating Wide-Field-of-View Augmented Reality with Mixed Reality Simulation

IEEE VR proceedings Abstract: Full-surround AR is an underexplored topic. Anticipating a change in AR capabilities, we experiment with wide-field-of-view annotations that link elements far apart in the visual field. We have built a system that simulates AR with different capabilities. We conducted a study comparing user performance on five different task groups within an information-seeking scenario, comparing two different fields of view and the presence and absence of tracking artifacts. A constrained field of view significantly increased task completion time. We found indications that the effect of tracking artifacts on task time varies with age.

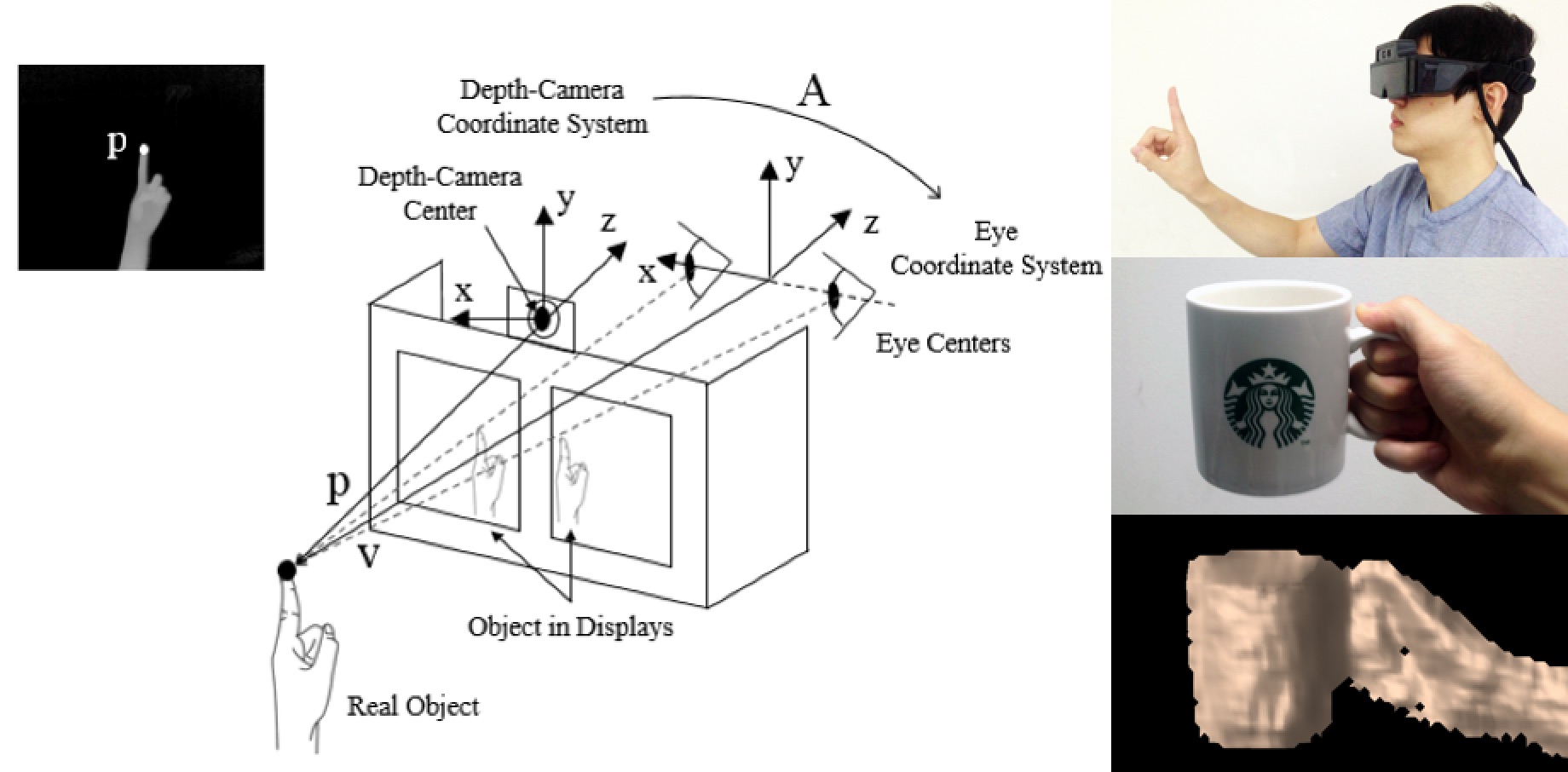

A Calibration Method for Optical See-through Head-mounted Displays with a Depth Camera

IEEE VR proceedings Abstract: We propose a fast and accurate calibration method for optical see-through head-mounted displays (OST-HMDs), taking advantage of the affordable OST-HMDs with depth cameras that are increasingly appearing on the commercial market. To correctly reflect the user experience in the calibration process, our method requires a user wearing the HMD to repeatedly point at rendered virtual circles with their fingertip. From the repeated calibration data, we perform two stages: full calibration and simplified calibration. Our experimental results show that the full and simplified calibrations can be achieved with 10 and 5 user repetitions, respectively.
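The abstract does not give the estimation step, so the sketch below assumes one common formulation: treat eye plus display as a pinhole projection and fit a general 3×4 matrix P with the direct linear transform (DLT), mapping fingertip positions from the depth camera (assumed to be expressed in a fixed head frame) to the screen positions of the rendered circles. P has 11 degrees of freedom and each pointing gives two equations, so at least six pointings are needed. This is an assumption about the full calibration, not the paper's stated model; all values are hypothetical and noise-free.

```python
import numpy as np

def dlt_projection(points_3d, points_2d):
    """Direct linear transform: estimate a 3x4 projection P (up to scale)
    from >= 6 non-coplanar 3D-2D correspondences.

    Each correspondence contributes two rows to A p = 0; p is the right
    singular vector of A with the smallest singular value. With noisy data,
    normalizing both point sets first (Hartley) improves conditioning.
    """
    rows = []
    for (x, y, z), (u, v) in zip(points_3d, points_2d):
        X = [x, y, z, 1.0]
        rows.append(X + [0.0] * 4 + [-u * c for c in X])
        rows.append([0.0] * 4 + X + [-v * c for c in X])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    return vt[-1].reshape(3, 4)

def project(P, points_3d):
    """Apply P to 3D points and dehomogenize to 2D screen coordinates."""
    pts = np.asarray(points_3d, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ P.T
    return homog[:, :2] / homog[:, 2:3]

# Synthetic check with a hypothetical ground-truth eye-display projection.
rng = np.random.default_rng(0)
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
P_true = K @ np.hstack([np.eye(3), [[0.1], [-0.05], [0.2]]])
fingertips = rng.uniform([-0.3, -0.3, 0.4], [0.3, 0.3, 0.8], size=(10, 3))  # 10 repetitions
circles = project(P_true, fingertips)          # where the rendered circles appeared
P_est = dlt_projection(fingertips, circles)
print("max reprojection error (px):", np.abs(project(P_est, fingertips) - circles).max())
```

With real pointing data the fingertip positions are noisy, so more repetitions than the six-point minimum are used and the SVD solution becomes a least-squares fit.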

3:30 pm – 5:00 pm

Displays and Sensory Integration

Session Chair: Gerd Bruder

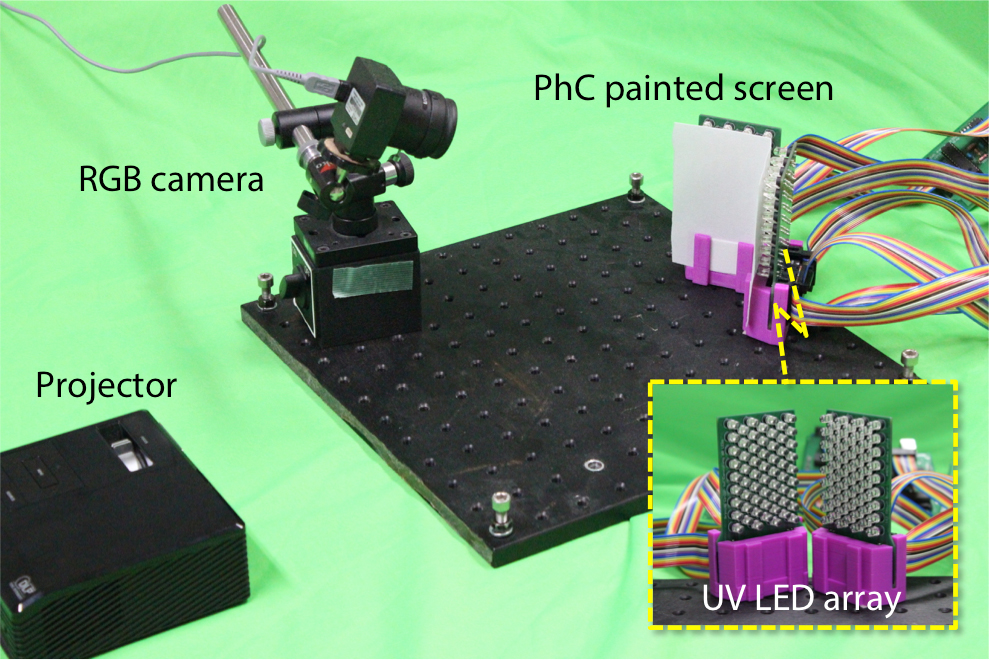

Inter-reflection Compensation of Immersive Projection Display by Spatio-Temporal Screen Reflectance Modulation

TVCG Abstract: We propose a novel inter-reflection compensation technique for immersive projection displays wherein we spatially modulate the reflectance pattern on the screen to improve the compensation performance of conventional methods. We realize spatial reflectance modulation by painting the screen with a photochromic compound, which changes its color when ultraviolet (UV) light is applied, and by controlling UV irradiation with a UV LED array placed behind the screen. The main contribution is a computational model to optimize a reflectance pattern for the accurate reproduction of a target appearance by decreasing the inter-reflection effects. Through simulation and physical experiments, we demonstrate and confirm the proposal’s advantage over conventional methods.

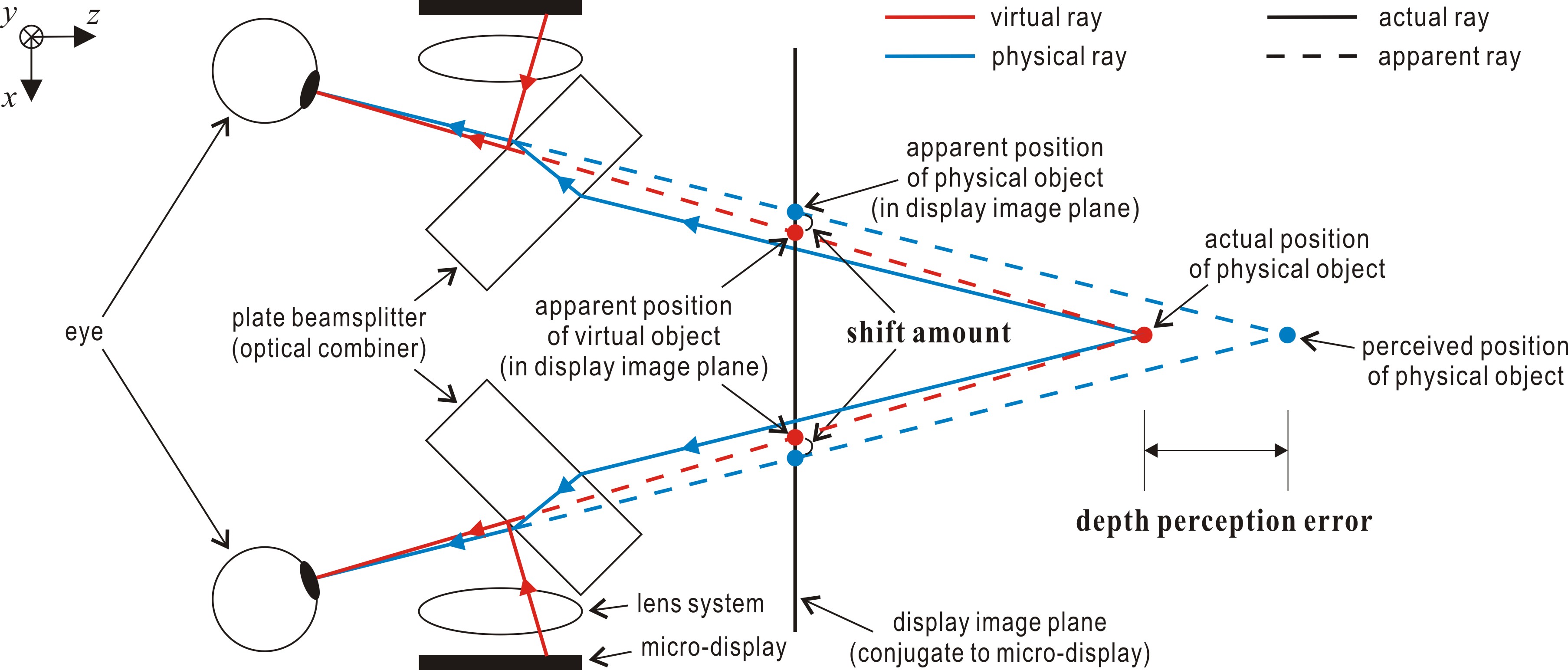

Effects of Configuration of Optical Combiner on Near-Field Depth Perception in Optical See-Through Head-Mounted Displays

TVCG Abstract: The ray-shift phenomenon is an apparent distance shift in the display image plane between virtual and physical objects. It is caused by the difference in refraction between the virtual-display and see-through optical paths, induced by optical combiners in optical see-through head-mounted displays. Through an experiment, we investigated the effects of the ray-shift phenomenon on depth perception at near-field distances for various configurations of the optical combiner. According to the experimental results, measured depth perception errors were similar to estimated ones where |estimated percentage error| > 0.3%. Participants showed significantly larger depth perception errors in the horizontal-tilt configuration than in an ordinary condition, but not in the vertical-tilt configuration.

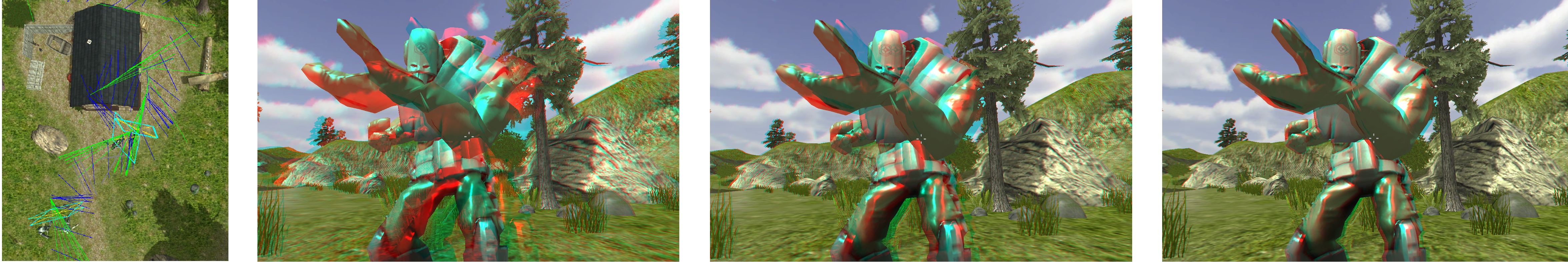

Gaze Prediction using Machine Learning for Dynamic Stereo Manipulation in Games

IEEE VR proceedings Abstract: Comfortable, high-quality 3D stereo viewing is becoming a requirement for interactive applications. Previous research shows that manipulating disparity can alleviate some of the discomfort caused by 3D stereo, but that it is best done around the object the user is gazing at. Player actions are highly correlated with the present state of a game, encoded by game variables. We train a classifier to learn these correlations using an eye tracker. The classifier is used at runtime to predict gaze during gameplay. We use this prediction to propose a dynamic disparity manipulation method that provides rich and comfortable depth.
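The abstract does not specify how the predicted gaze drives the disparity manipulation. As an assumption-laden sketch, one simple policy is to shift all disparities so the predicted target lands at zero disparity (the screen plane) and then compress the remaining range uniformly into a comfort budget. The comfort limit, pixel units and function name are hypothetical, and the classifier's prediction is taken as given.

```python
import numpy as np

def retarget_disparity(disparities, gazed_index, comfort_limit):
    """Shift disparities so the (predicted) gazed object sits at zero disparity,
    then scale uniformly if anything would exceed +/- comfort_limit."""
    d = np.asarray(disparities, dtype=float)
    shifted = d - d[gazed_index]               # gazed object -> screen plane
    extent = np.max(np.abs(shifted))
    scale = min(1.0, comfort_limit / extent) if extent > 0 else 1.0
    return shifted * scale

# Hypothetical per-object disparities (pixels) and a predicted gaze target.
object_disparities = [-30.0, -5.0, 10.0, 40.0]
predicted_target = 1                           # e.g. the classifier's output for this frame
print(retarget_disparity(object_disparities, predicted_target, comfort_limit=20.0))
```

A uniform scale preserves depth ordering; in practice the shift would also be smoothed over time so that changes in the predicted target do not cause visible depth jumps.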

Motion Effects Synthesis for 4D Films

TVCG Presentation Abstract: 4D film is an immersive entertainment system that presents various physical effects along with a film to enhance viewers’ experiences. Despite the recent emergence of 4D theaters, the production of 4D effects relies on manual authoring. In this paper, we present algorithms that synthesize three classes of motion effects from the audiovisual content of a film. The first class of motion effects responds to fast camera motion to enhance the immersiveness of point-of-view shots, delivering fast and dynamic vestibular feedback. The second class moves viewers as closely as possible along the trajectory of a slowly moving camera. Such motion provides the illusion of observing the scene from a distance while moving slowly within it. For these two classes, our algorithms compute the relative camera motion and then map it to motion commands for the 4D chair using appropriate motion mapping algorithms. The last class is for special effects, such as explosions, for which our algorithm uses sound to synthesize impulses and vibrations. We assessed the subjective quality of our algorithms in user experiments, and the results indicate that our algorithms can provide compelling motion effects.
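The abstract says only that camera motion is mapped to chair commands "using appropriate motion mapping algorithms". A classical motion-cueing building block for simulators is the washout filter, sketched below as a first-order high-pass filter followed by clamping to the chair's travel limits; whether the authors use it is not stated, and the time constant, command rate, travel limit and function name are hypothetical.

```python
import numpy as np

def washout(command, dt, tau, travel_limit):
    """First-order high-pass ("washout") filter plus clamping for a motion chair.

    Transient changes in `command` pass through, while sustained offsets decay
    back toward neutral with time constant `tau`, so the chair does not run out
    of travel. Output is clamped to +/- travel_limit.
    """
    a = tau / (tau + dt)
    out = np.empty(len(command))
    prev_x = prev_y = 0.0
    for n, x in enumerate(command):
        y = a * (prev_y + x - prev_x)          # discrete first-order high-pass
        out[n] = y
        prev_x, prev_y = x, y
    return np.clip(out, -travel_limit, travel_limit)

# Hypothetical camera pitch signal (normalized) that steps up and stays there.
dt = 0.01                                      # 100 Hz command rate (assumed)
step = np.ones(200)
chair_pitch = washout(step, dt=dt, tau=0.2, travel_limit=0.5)
print(chair_pitch[:3], chair_pitch[-1])
```

The step onset produces a strong cue that then fades, which conveys the sudden change without holding the chair at an extreme position for the rest of the shot.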

Wednesday, 23rd March 2016

10:00 am – 12:30 pm

Interaction and Immersion

Session Chair: Alexander Kulik

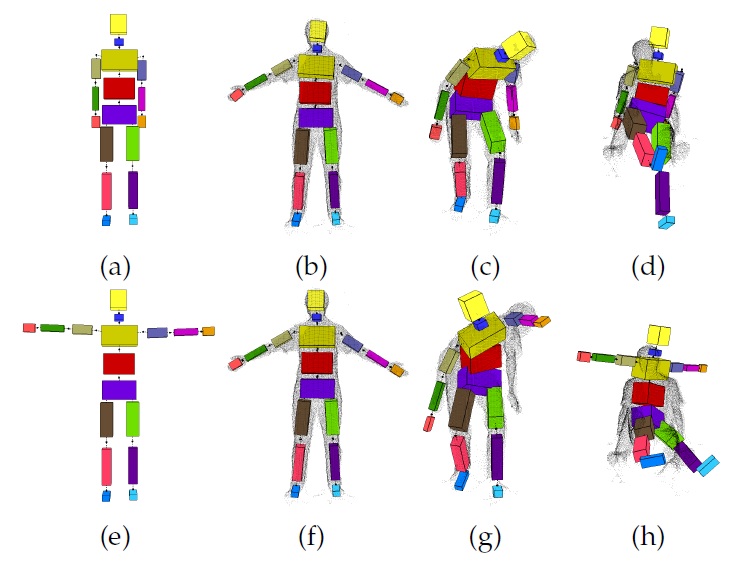

Motion Capture with Ellipsoidal Skeleton using Multiple Depth Cameras

TVCG Presentation Abstract: This paper introduces a novel motion capture framework that works by minimizing the fitting error between an ellipsoid-based skeleton and the input point cloud data captured by multiple depth cameras. The novelty of this method lies in its use of ellipsoids equipped with spherical-harmonics-encoded displacement and normal functions to capture the geometric details of the tracked object. The method also integrates a mechanism to avoid collisions between bones during motion capture. It is implemented in parallel with CUDA on the GPU and runs fast without dedicated code optimization. The errors of the proposed method on data from the Berkeley Multimodal Human Action Database (MHAD) are within a reasonable range compared with the ground truth. Our experiments show that this method succeeds on many challenging motions on which the Microsoft Kinect SDK fails and which existing works have not tested. In a comparison with state-of-the-art markerless depth-camera-based motion tracking work, our method shows advantages in both robustness and input data modality.

Lift-Off: Using Reference Imagery and Freehand Sketching to Create 3D Models in VR

TVCG Abstract: Current VR-based 3D modeling tools suffer from two problems that limit creativity and applicability: (1) the lack of control for freehand modeling, and (2) the difficulty of starting from scratch. To address these challenges, we present Lift-Off, an immersive 3D interface for creating complex models with a controlled, handcrafted style. Artists start with 2D sketches, which are then positioned in VR. 2D curves within the sketches are selected interactively and “lifted” into space to create a 3D scaffolding. Finally, artists sweep surfaces along these curves to create 3D models. Evaluations are presented for both long-term users and for novices.

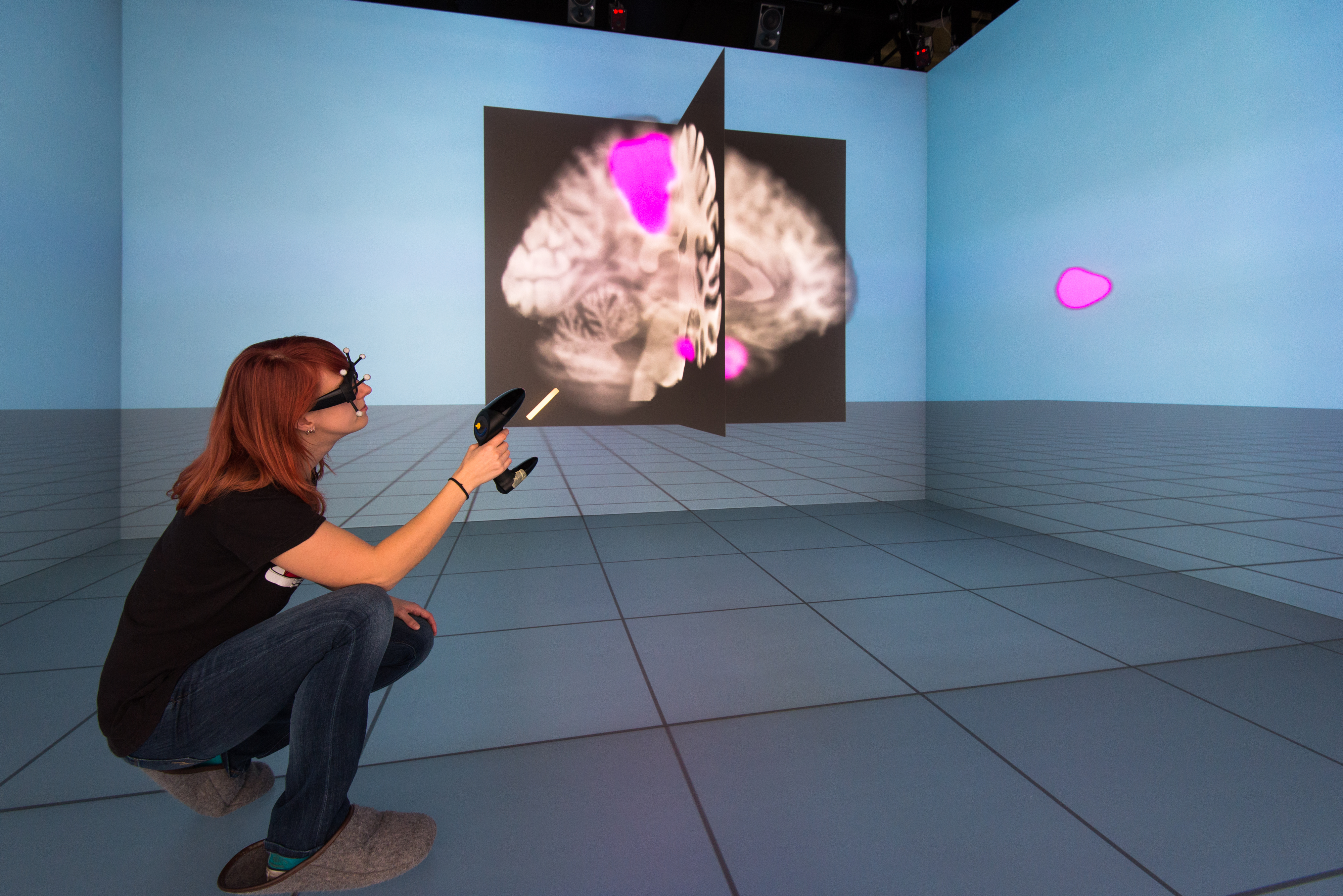

Design and Evaluation of Data Annotation Workflows for CAVE-like Virtual Environments

TVCG Abstract: Data annotation is used in Virtual Reality applications to facilitate the data analysis process, e.g., architectural reviews. While many annotation systems have been presented, we observe that the process of metadata handling is often not covered thoroughly. To improve this situation, we present an annotation workflow that supports the creation, access, and modification of annotation contents in a flexible fashion. As an initial evaluation, we performed a user study in a CAVE-like virtual environment which compared our design to two alternatives in terms of an annotation creation task. Our design obtained good results in terms of task performance and user experience.

Examining Rotation Gain in CAVE-like Virtual Environments

TVCG Abstract: In this work, we examined the effects of rotation gain in a CAVE-like virtual environment. The results show no significant effects of rotation gain within the range [0.85; 1.18] on simulator sickness, presence, or user performance in a cognitive task, but indicate a negative influence on spatial knowledge for inexperienced users. Furthermore, we found a negative correlation between simulator sickness and presence, cognitive performance, and spatial knowledge; a positive correlation between presence and spatial knowledge; a mitigating influence of previous experience on simulator sickness; and a higher incidence of simulator sickness in women.
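Rotation gain is commonly defined as the ratio of virtual rotation to physical rotation: with a gain above 1 the virtual scene turns more than the user's head, and below 1 it turns less. The following minimal sketch illustrates that mapping for yaw only, under stated assumptions: the function names are hypothetical, and rejecting gains outside the studied range [0.85; 1.18] is an illustrative choice, not something the paper prescribes.

```python
# Hypothetical yaw-only illustration of rotation gain; not the authors' implementation.
STUDIED_GAIN_RANGE = (0.85, 1.18)  # gain range examined in the study


def virtual_yaw_delta(physical_delta_deg: float, gain: float) -> float:
    """Scale one frame's physical head-yaw change (degrees) by the rotation gain."""
    low, high = STUDIED_GAIN_RANGE
    # Assumption: only gains inside the studied range are accepted.
    if not low <= gain <= high:
        raise ValueError(f"gain {gain} outside studied range {STUDIED_GAIN_RANGE}")
    return gain * physical_delta_deg


def accumulate_virtual_yaw(physical_deltas_deg, gain: float, start_deg: float = 0.0) -> float:
    """Sum gain-scaled yaw changes frame by frame, wrapping the result to [0, 360)."""
    yaw = start_deg
    for delta in physical_deltas_deg:
        yaw = (yaw + virtual_yaw_delta(delta, gain)) % 360.0
    return yaw


# A user physically turning 90 degrees sees the scene turn about 106.2 degrees at gain 1.18.
print(virtual_yaw_delta(90.0, 1.18))
```

Applying the gain to per-frame deltas rather than to absolute head orientation keeps the mapping stable if the gain is changed during a session.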

Visual Quality Adjustment for Volume Rendering in a Head-Tracked Virtual Environment

TVCG Abstract: In this work, we evaluate the trade-off between visual quality and latency in volume rendering applications for virtual environments. To this end, we present the results of a controlled user study in which search and count tasks were performed under varying volume rendering conditions applied according to viewer position updates. Our results indicate that participants preferred continuous adjustment of the visual quality both over an instantaneous adjustment that guaranteed low latency and over no adjustment, which provided constant high visual quality but rather low frame rates. Under the continuous condition, participants showed the best task performance and felt less disturbed by visualization effects while moving.

Extrafoveal Video Extension for an Immersive Viewing Experience

TVCG Presentation Abstract: With the recent popularity of virtual reality (VR) and the development of 3D, immersion has become an integral part of entertainment concepts. Head-mounted display (HMD) devices are often used to give users a feeling of immersion in the environment. Another technique is to project additional material around the viewer, as is done in CAVE systems. As a continuation of this technique, it could be interesting to extend surrounding projection to current television or cinema screens. The idea would be to entirely fill the viewer’s field of vision, giving them a more complete feeling of being in the scene and part of the story. Appropriate content can be captured with large field-of-view (FoV) technology, using a camera rig for 110° to 360° capture, or created with computer-generated imagery. For existing (legacy) content, however, the FoV is rather limited, covering between 36° and 90° depending on the distance from the screen. This paper seeks to overcome this FoV limitation by proposing computer vision techniques that extend such legacy content into the peripheral (extrafoveal) vision without changing the original creative intent or degrading the viewer’s experience. A new methodology is also proposed for performing user tests to evaluate the quality of the experience and confirm that the sense of immersion has increased. This paper thus presents: i) an algorithm to spatially extend the video based on human vision characteristics, ii) its subjective results compared to state-of-the-art techniques, iii) the protocol required to evaluate the quality of experience (QoE), and iv) the results of the user tests.

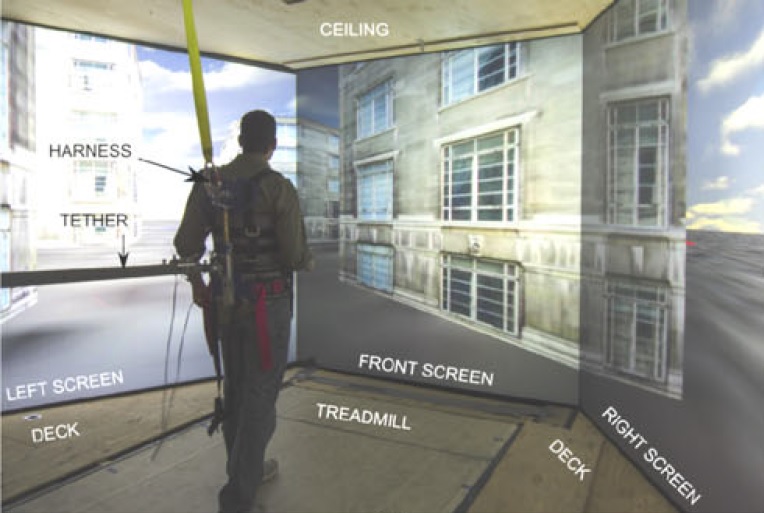

A Full Body Steerable Wind Display for a Locomotion Interface

TVCG Presentation Abstract: This paper presents the Treadport Active Wind Tunnel (TPAWT), a full-body immersive virtual environment for the Treadport locomotion interface, designed to generate wind on a user from any frontal direction at speeds up to 20 kph. The goal is to simulate the experience of realistic wind while walking in an outdoor virtual environment. A recirculating-type wind tunnel was created around the pre-existing Treadport installation by adding a large fan, ducting, and enclosure walls. In a non-intrusive design, two sheets of air flow along the side screens of the back-projection CAVE-like visual display, where they impinge and mix at the front screen and are redirected toward the user in a full-body cross-section. By varying the flow conditions of the air sheets, the direction and speed of the wind at the user are controlled. Design challenges in fitting the wind tunnel into the pre-existing facility and in managing turbulence to achieve stable, steerable flow were overcome. The controller performance for wind speed and direction is demonstrated experimentally.

1:45 pm – 3:15 pm

Applications

Session Chair: John Quarles

Taking Immersive VR Leap in Training of Landing Signal Officers

TVCG Abstract: A major device used to train all Landing Signal Officers (LSOs) has been a two-story-tall simulator called the Landing Signal Officer Trainer, Device 2H111. LSOs typically use this system for only six one-hour sessions, leaving multiple gaps in training. This paper details our efforts in designing, building, and testing a lightweight VR prototype training system that uses commercial off-the-shelf solutions and provides LSOs with an unlimited number of training opportunities, unrestricted by location and time. The results achieved in this effort indicate that the time for LSO training to make the leap to immersive VR has decidedly come.

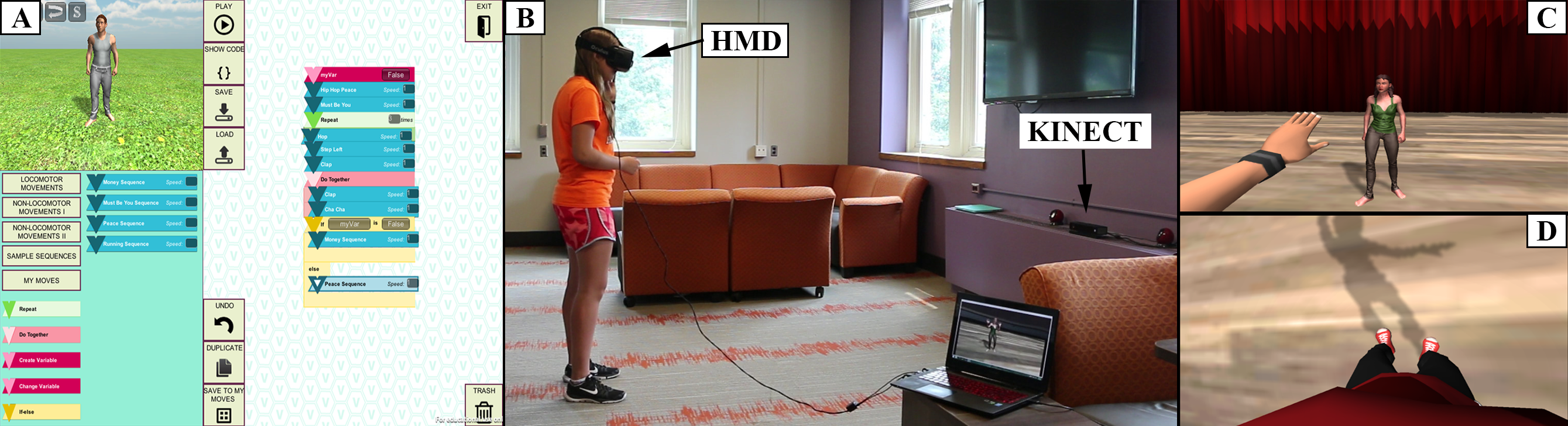

Programming Moves: Design and Evaluation of Applying Embodied Interaction in Virtual Environments to Enhance Computational Thinking in Middle School Students

IEEE VR proceedings Abstract: We detail the design, implementation, and initial evaluation of a virtual reality education and entertainment application called Virtual Environment Interactions (VEnvI), in which students learn computer science concepts through the process of choreographing movement for a virtual character using a fun and intuitive interface. Participants programmatically crafted a dance performance for a virtual human and participated in an immersive embodied interaction metaphor in VEnvI. We qualitatively and quantitatively evaluated the extent to which the activities within VEnvI facilitated students’ edutainment, presence, interest, excitement, and engagement in computing, and the potential to alter their perceptions of computing and computer scientists.

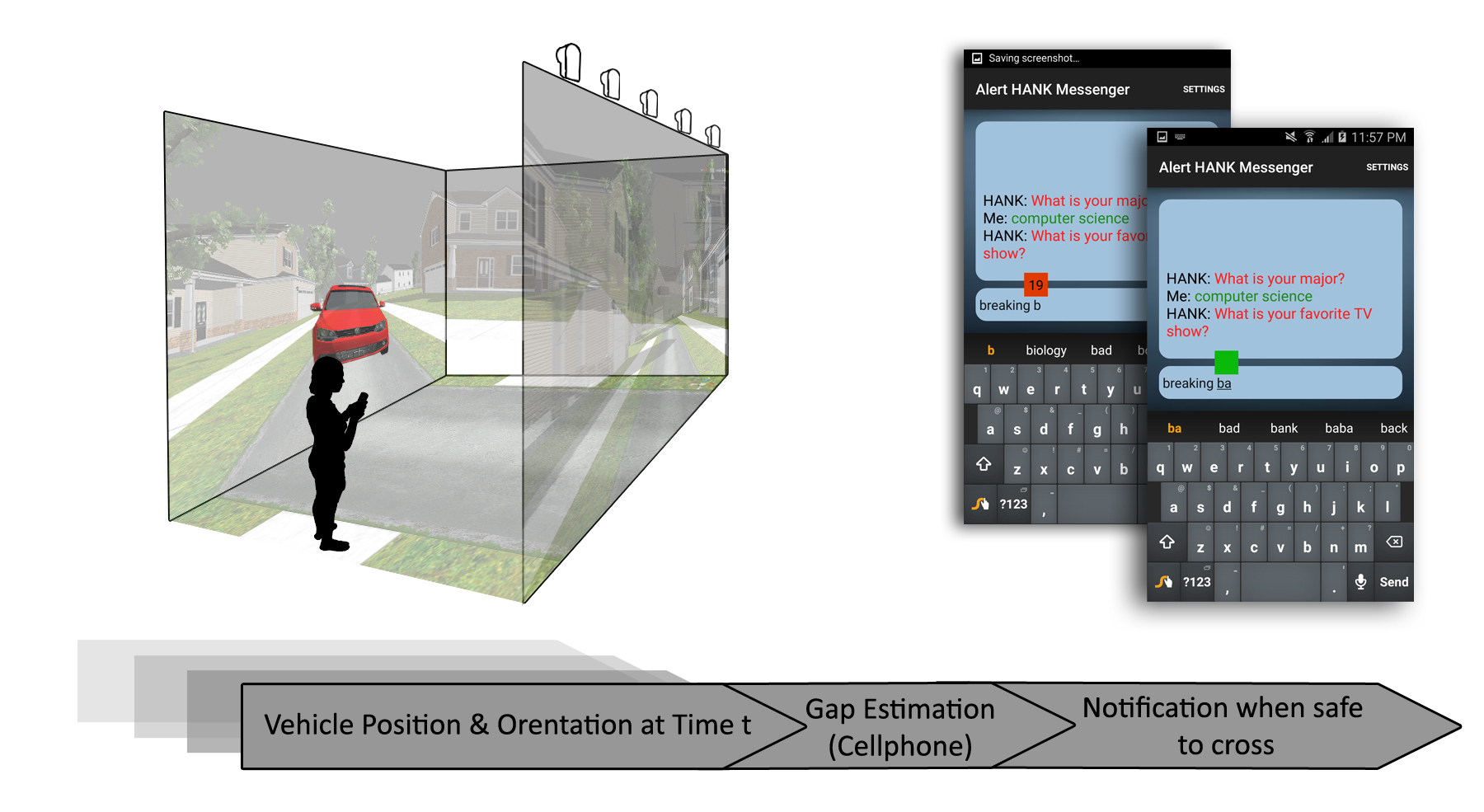

Using a Virtual Environment to Study the Impact of Sending Traffic Alerts to Texting Pedestrians

IEEE VR proceedings Abstract: This paper presents an experiment conducted in a large-screen virtual environment to evaluate how texting pedestrians respond to permissive traffic alerts delivered via their cell phone. We compared gap selection and movement timing in three conditions: texting, texting with alerts, and no texting (control). Participants in the control and alert groups chose larger gaps, were more discriminating in their gap choices, and had more time to spare than participants in the texting group. The alert group also paid the least attention to the roadway. The results demonstrate the potential and risks of Vehicle-to-Pedestrian (V2P) communications technology for mitigating pedestrian-vehicle crashes.