FLAVRS Demonstrations

Quick Links

- FD-001: MEteor

- FD-002: Knightrider

- FD-003-1: MR Window

- FD-003-2: 3D Face Capture

- FD-004: Physical-Virtual Avatar

- FD-005: TeachLive

- FD-006: The Great World's Fair

- FD-007: Emerging Tech. Integration

- FD-008: Game-Based Training

- FD-009: Interactive Tools for Learning

- FD-010: Disconnect 2 Drive

- FD-011: Anxiety Disorders

- FD-012: Decision Support AR

- FD-013: 3D Model Acquisition

- FD-014: Full Body Gesture Rec.

- FD-015: UAV Gesture Interface

- FD-016-1: Neurological Exam Rehearsal

- FD-016-2: Prostate Exam Simulator

- FD-016-3: Peds

- FD-016-4: Animatronic Digital Avatar

- FD-017: Surgery Illustration Toolkit

- FD-018: Mixed Phys. & Virt. Sims

- FD-020: Laparoscopic Trainer

- FD-021-1: Avatars4Change

- FD-021-2: Ambient Avatars

- FD-021-3: TeamVis Debriefing System

- FD-022: VR Job Training

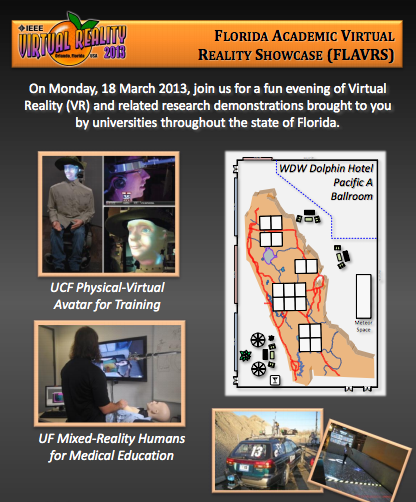

On Monday, 18 March 2013, join us for a fun evening of Virtual Reality (VR) and related research demonstrations brought to you by universities throughout the state of Florida! In the context of the conference, we would like to showcase a representative sample of Florida research groups to emphasize the importance of the region in VR-related research efforts. The showcase will be the highlight of the evening and will provide an opportunity to interact with a diverse community of researchers and practitioners. In addition, the event aims to reach a broader audience by being open to family members of conference participants and to selected local professionals.

What?

Florida Academic Virtual Reality Showcase (FLAVRS): An evening of VR and related research demonstrations brought to you by universities throughout the state of Florida, in an appropriately themed festive and engaging atmosphere. Reception food is provided for VR attendees, and a cash bar is available to all.

When?

Monday evening, 18 March 2013, from 6 to 9 p.m.

Where?

Walt Disney World Dolphin Hotel (adjacent to the WDW Swan Hotel), Pacific A Ballroom and Terrace

Who?

The event is open to the public, and admission without food is free. Registered VR 2013 attendees will have access to reception food and a cash bar. Other adults (not registered for VR 2013) may purchase reception food/drink tickets on site for $40 each, and/or purchase drinks from the cash bar. All children will have access to a “Kids Eat Free” table.

Thanks!

The FLAVRS event is sponsored by the University of Central Florida (Institute for Simulation & Training, College of Engineering & Computer Science, Office of Research and Commercialization, EECS Division of Computer Science) and the University of Florida. The effort to organize the event is led by the FLAVRS Chairs:

- Remo Pillat, University of Central Florida

- Aleshia Hayes, University of Central Florida

We would like to express special thanks to all FLAVRS Volunteers who have been devoting their time to making the event a success:

- Arjun Nagendran, University of Central Florida

- Mike Eakins, University of Central Florida

- Lijuan 'Alice' Yin, University of Central Florida

- Yiyan 'Lucy' Xiong, University of Central Florida

- Ryan Patrick, University of Central Florida

- Rongkai Guo, University of Texas at San Antonio

- Khrystyna Vasylevska, Vienna University of Technology, Austria

- Denise Benkert, University of Central Florida

- Tarah Schmidt, University of Central Florida

- Rob Michlowitz, University of Central Florida

- Paul Hayes, Indiana Institute of Technology

- Aaron Koch, University of Central Florida

- Kelly Vandegeer, University of Central Florida

- Kristianna Pillat, University of Central Florida

- Lori Walters, University of Central Florida

Demonstrations

FD-001: MEteor (UCF)

- Presenters/Organizers: Dr. Robb Lindgren, Dr. Charles E. Hughes

- Demo Description

MEteor is a space for facilitating whole-body metaphors where learners use the physical movement and positioning of their entire bodies to enact their understanding of complex concepts. Multi-projector blending and laser-based tracking are used to create a large, seamless interaction area on the floor that affords a human-scale interaction environment. MEteor is focused on planetary astronomy and a few select principles of physics typically introduced to students in middle school. Rather than having learners experience these principles as external observers, as they are typically taught in school, MEteor creates a simulation that allows a participant to become part of the planetary system.

- Research Lab Description

The Media & Learning Lab is part of the School of Visual Arts & Design at the University of Central Florida. Our mission is to design and study emerging media technologies that enhance people's learning and reasoning. Faculty and students in our lab are collaborating to explore applications in immersive virtual worlds, video games, and online and mobile media in a research- and development-oriented environment.

- Video Link

http://vimeo.com/49391732

FD-002: Knightrider Robot Car (UCF)

- Presenters/Organizers: Don Harper

- Demo Description

We present “Knightrider”, the UCF robot car that participated in the DARPA Grand Challenge 2005 and the DARPA Urban Challenge in 2007. In the latter, our team placed 7th out of an initial field of 89 competitors. You can get an up-close look at the sensing, actuation, and computing components necessary for fully autonomous driving in urban traffic scenarios.

- Research Lab Description

- Video Link

http://www.youtube.com/watch?v=FIPAz7cXyP8

FD-003-1: Mixed-Reality Window (UCF)

- Presenters/Organizers: Steven Braeger

- Demo Description

By using a series of gradient color patterns, we calibrate the total non-linear mapping function between the color spaces of the real world, a display, and a camera. This allows us to correct the color distortions often found in Mixed-Reality (MR) applications that are caused by a display device. This correction improves the immersion of many MR applications when they are embedded in the real world.

- Research Lab Description

(Please see FD-004)
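
As a rough illustration of the calibration idea in FD-003-1, the sketch below inverts a single-channel display-to-camera response measured from a gradient pattern, using piecewise-linear interpolation. It is a simplified example under stated assumptions, not the presenters' method: the function name `invert_response`, the sample measurements, and the per-channel treatment are all hypothetical.

```python
from bisect import bisect_left
from typing import Sequence


def invert_response(inputs: Sequence[float],
                    measured: Sequence[float],
                    target: float) -> float:
    """Return the display input expected to produce `target` at the camera.

    `inputs` are the gradient levels that were displayed and `measured` are
    the corresponding camera readings. Both are assumed to be strictly
    increasing (a hypothetical, well-behaved channel).
    """
    # Clamp targets that fall outside the measured range.
    if target <= measured[0]:
        return float(inputs[0])
    if target >= measured[-1]:
        return float(inputs[-1])
    # Find the measured segment that brackets the target value.
    i = bisect_left(measured, target)
    x0, x1 = inputs[i - 1], inputs[i]
    y0, y1 = measured[i - 1], measured[i]
    # Linear interpolation within the bracketing segment.
    return x0 + (x1 - x0) * (target - y0) / (y1 - y0)


# Hypothetical gradient levels and camera readings for one color channel.
levels = [0, 64, 128, 192, 255]
readings = [0, 16, 64, 144, 255]
corrected = invert_response(levels, readings, 40)
```

Here `corrected` is the input level one would send to the display so that the camera observes roughly the desired value. A full calibration would have to handle all three channels jointly and any cross-channel effects, which this sketch ignores.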

FD-003-2: 3D Face Capture (UCF)

- Presenters/Organizers: Steven Braeger

- Demo Description

We demonstrate a device capable of capturing highly dense and accurate normal maps and 3D surface information of real-world objects using off-the-shelf parts. Using polarized photometric stereo, our device can produce 3D data with significantly higher fidelity than other devices of similar cost, such as the Kinect.

- Research Lab Description

(Please see FD-004)

FD-004: Physical-Virtual Avatar (UCF)

- Presenters/Organizers: Dr. Greg Welch

- Demo Description

Physical-virtual humans combine work in physical animatronics and virtual humans to achieve virtual humans with physical manifestations. Applications include telepresence and training, with agency ranging from human to autonomous. We have invited an engaging young boy named "Sean" to attend FLAVRS using the PVA. Sean loves interacting with interesting adults, so please seek him out at the event. He will be roving around on an electric scooter, so watch out!

- Research Lab Description

The Synthetic Reality Laboratory (SREAL) team consists of five faculty researchers, thirteen affiliated faculty members, sixteen PhD students, six undergraduate research assistants, two full-time artists (modelers/animators), and a network of campus and institute collaborators. The lab's research activities include interaction with human surrogates (virtual and physical/virtual); robotics; motion tracking/capture; computer graphics; image processing; digital avateering; experiential learning; and digital heritage.

- Video Link

http://goo.gl/7QcMC

FD-005: TeachLive (UCF)

- Presenters/Organizers: Dr. Charles Hughes

- Demo Description

Did you ever want to try your skills at teaching in the hardest environment ever conceived, a middle school classroom? TeachLive will give visitors the opportunity to teach a class of five virtual middle school students. The visitor can walk freely through the classroom, having a dialogue with the class or with individuals within it.

- Research Lab Description

(Please see FD-004)

- Video Link

http://www.youtube.com/watch?v=ajKoueQ1rHs&feature=youtu.be

FD-006: The Great World's Fair Adventure (UCF)

- Presenters/Organizers: Dr. Lori C. Walters

- Demo Description

ChronoLeap explores the use of historical virtual environments as a learning landscape for STEM education. Stop by, try on a pair of passive 3D glasses, and visit the 1964/65 New York World's Fair!

- Research Lab Description

(Please see FD-004)

- Media Link

http://www.chronoleap.com

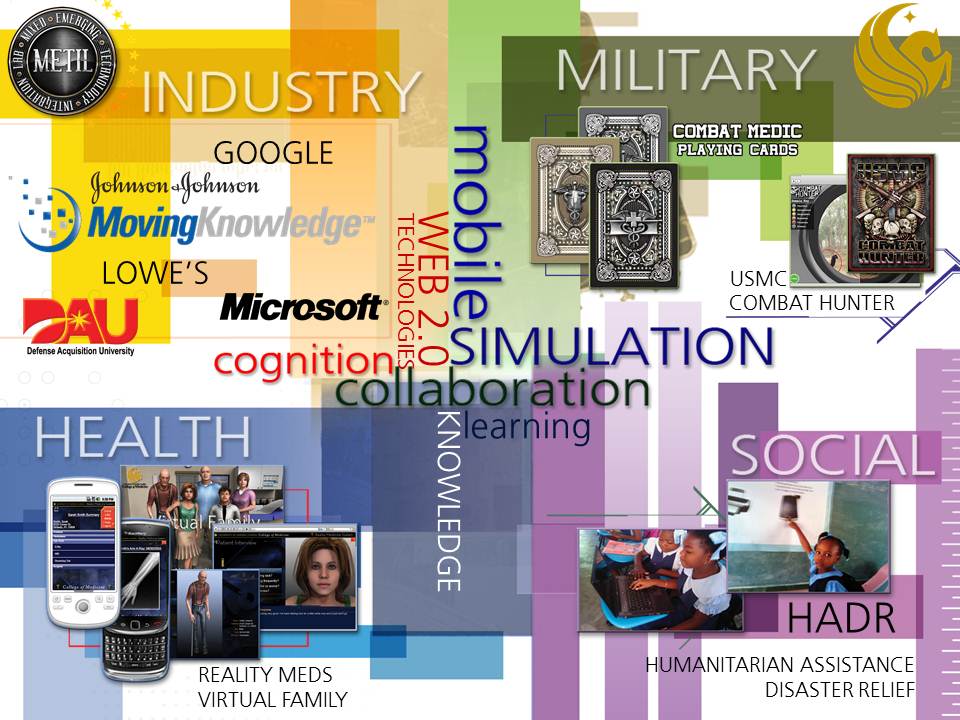

FD-007: Mixed Emerging Technology Integration (UCF)

- Presenters/Organizers: Dr. David Metcalf

- Demo Description

METIL has partnered with leaders from Industry, Academia, and the Military to create resources and develop innovative solutions with mobile technology and Web 2.0 applications. We will be showcasing our lab’s latest projects, including demonstrations of AR Army Combat Medic Cards, 3D medical models, and much more. Our crash cart with mobile peripherals will also be on display.

- Research Lab Description

The Mixed Emerging Technology Integration Lab was founded by Dr. David Metcalf in 2006 at the University of Central Florida's Institute for Simulation & Training. METIL relies on an experienced research team comprised of doctoral and post-doctoral fellows who in turn manage dozens of graduate and undergraduate students in the fields of Computer Science, Digital Media, Internet Interactive Systems, and Learning & Cognition. Leveraging the resources of one of the largest universities in the United States, METIL is able to provide research & development expertise for our partners in the public, private, and social sectors. See http://www.metillab.com for more information.

FD-008: Game-Based Training and Scenario Generation (UCF)

- Presenters/Organizers: Dr. Glenn Martin

- Demo Description

We plan to show a cross-section of our work. Over the years, our virtual environments research has gone from fully-immersive VR pods to game-based systems to mobile tablets. A thread throughout has been applying these technologies to training. We present a demonstration of our mobile virtual environment research in Man vs. Minotaur Alligator, a Florida-themed version of a mythology-oriented experience. Our game-based research is shown through the Game-based Performance Assessment Battery (GamePAB), which measures a user’s capabilities in the basic operations of using a serious game for training. Finally, we present our research into semi-automated scenario generation and after action review to highlight making training environments more intelligent and adaptive, and show one of our haptic-based part-task trainers for combat medics.

- Research Lab Description

The Interactive Realities Laboratory (IRL) at the University of Central Florida's Institute for Simulation and Training researches areas within virtual and augmented reality with a focus on environments that are dynamically changing, user and world alterable and distributed. Specifically, we pursue research in multi-modal simulation (both virtual and physical), game-based learning, adaptive training, competitive learning, interactive high performance computing, and applied research. Ultimately, our goal is to make more adaptive and intelligent training environments and tools.

FD-009: Interactive Tools for Learning (UCF)

- Presenters/Organizers: Eileen Smith

- Demo Description

E2i Creative Studio brings four interactive simulations for attendees to explore. A two-player Hurricane Simulation allows one player to build a house, prep it for a storm and see how it fares. The other player creates a storm, then makes dynamic changes to it as it heads toward land. The Evolution Simulation shows how species evolve based on locale and food source. “Gravitational Forces” begins with a primer on gravity, mass and orbit, then allows the player to launch objects into a solar system with varying parameters; can the object be put successfully in orbit? All four of these learning simulations are designed to prompt rich discussion about both the particular actions on screen and the related science topics stimulated by the screen play.

- Research Lab Description

E2i Creative Studio is a bridge between academia and industry, with applied research and production projects that explore human performance in applications as diverse as medical, military and entertainment. We use emerging technologies to research their impact on human performance. We partner with diverse industries, organizations and individuals to collectively drive further than we could on our own, with projects ranging from experiential learning in museums to training and rehabilitation. We partner with global commercial firms in order to push applied media innovations into real-world experiential venues.

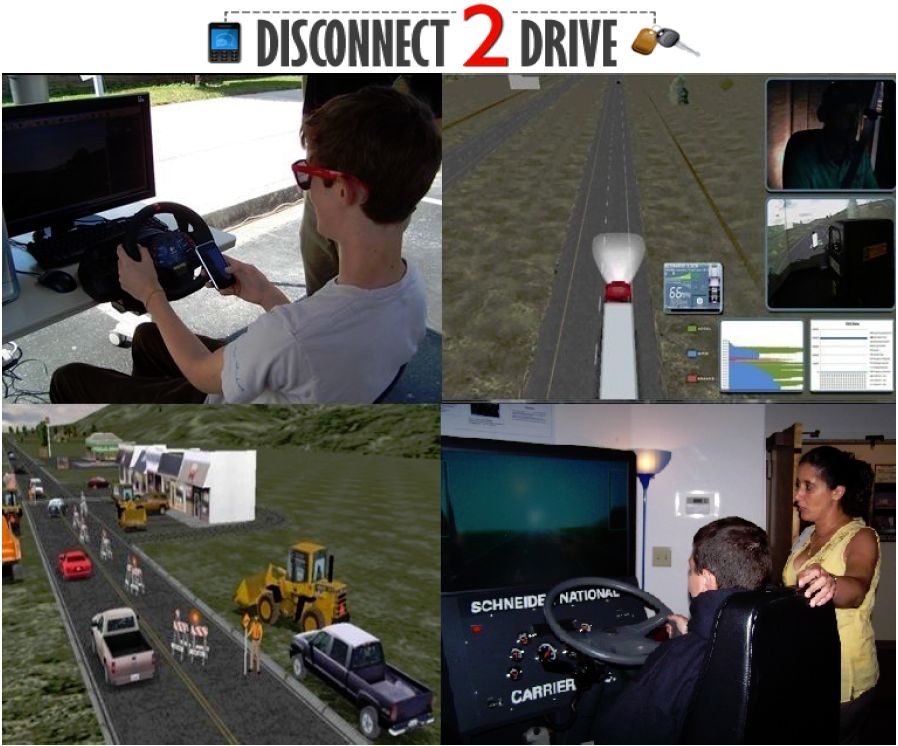

FD-010: Disconnect 2 Drive (UCF)

- Presenters/Organizers: Dr. Ron Tarr, Tim Forkenbrock

- Demo Description

The VS II is a computer-controlled simulator designed to teach trainees in a classroom environment the skills they need to safely operate a vehicle. Training on the VS II, the trainee learns how to drive under various situations in the safety and convenience of the classroom. In the Distracted Driving scenario, the trainee will learn the dangers and difficulty of driving through real-world scenarios while distracted. In addition to the driving simulation, the RAPTER team will be providing a slide show of current research areas and projects.

- Research Lab Description

The goal of the Research in Advanced Performance Technology and Educational Readiness (RAPTER) lab at UCF’s Institute for Simulation and Training (IST) is to enhance human performance through the use of appropriate technologies and strategies. These technologies and strategies include simulation, computer- and web-based training, decision support aids, workshops, and traditional methods. As human performance technologists, we look at how people perform as individuals, in teams, and within organizations, and we work with a variety of domains, sponsors, and content areas to find the right solution to any challenge. Designed to answer universal needs, these solutions can be incorporated across disciplines and domains.

- Video Link

http://disconnect2drive.rapter.ist.ucf.edu/

FD-011: Anxiety Disorder Research (UCF)

- Presenters/Organizers: Dr. Deborah Beidel

- Demo Description

There will be three demonstrations. The first is Pegasus School, a newly created virtual environment in which children with social anxiety may practice their newly acquired social interaction skills. Pegasus School, developed in collaboration with Virtually Better, Inc. (Atlanta, GA), runs on a desktop computer. The second demonstration is Lift Off!, a newly developed iPad application for the treatment of selective mutism (an anxiety-based childhood disorder). The third demonstration is Virtual Iraq, developed by Skip Rizzo and the Institute for Creative Technologies at the University of Southern California. The UCF team is one of only two programs in the country to be engaged in an empirical evaluation of this HMD-based virtual environment for the treatment of combat-related PTSD.

- Research Lab Description

The Center for Trauma, Anxiety, Resilience and Prevention (CTARP) is dedicated to all facets of anxiety disorders and post-traumatic stress disorder (PTSD), including etiology, psychopathology, treatment, prevention and resilience initiatives. A unique feature of CTARP is the development and use of established and emerging technologies to enhance prevention and intervention efforts. Our efforts focus on virtual reality, telehealth, smartphone and iPad technologies to enhance more traditional intervention and delivery strategies. Current CTARP initiatives include programs to address post-traumatic stress disorders, childhood social anxiety disorder, and selective mutism.

FD-012: Decision Support and Context-Aware AR environments (UCF)

- Presenters/Organizers: Dr. Amir Behzadan

- Demo Description

We are planning to show live demos of a prototype augmented reality application we are currently developing for construction and civil engineering education. Attendees can also use their tablet devices or smartphones to view and interact with context-aware graphics (2D and 3D models, animations) and multimedia content (images, videos, sounds) superimposed on top of real book pages, and experience first-hand how students and trainees can benefit from learning abstract engineering topics through interacting with superimposed visual information. In addition, we will showcase our latest research findings in data-driven simulation and visualization of construction operations.

- Research Lab Description

The Decision Support, Information Management, and Automation Laboratory (DESIMAL) is a research lab in the Department of Civil, Environmental, and Construction Engineering at UCF. We specialize in simulation, data mining, and visualization of construction and civil engineering operations. Currently, we are pursuing research in (1) data-driven stochastic simulation of unstructured construction systems, and (2) context-aware augmented reality environments.

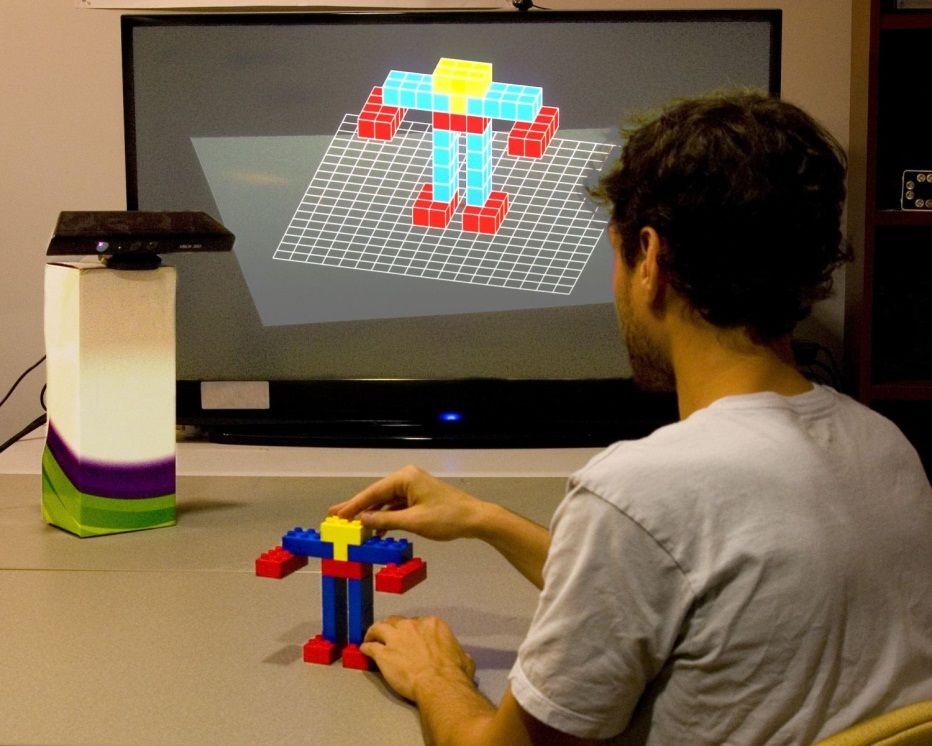

FD-013: 3D Model Acquisition and Tracking of Building Block Structures (UCF)

- Presenters/Organizers: Dr. Joe LaViola

- Demo Description

We present a prototype system for interactive construction and modification of 3D physical models using building blocks. Our system uses a depth sensing camera and a novel algorithm for acquiring and tracking the physical models. The algorithm, Lattice-First, is based on the fact that building block structures can be arranged in a 3D point lattice where the smallest block unit is a basis from which to derive all the pieces of the model. The algorithm also makes it possible for users to interact naturally with the physical model as it is acquired, using their bare hands to add and remove pieces.

- Research Lab Description

The Interactive Systems and User Experience Lab’s mission is to develop innovative techniques, tools, and applications that improve the overall experience between humans and machines. More specifically, we are interested in the creation and evaluation of advanced interfaces that support education, entertainment, and general work productivity. We focus on 3D user interfaces for games, training, and entertainment, sketch-based interfaces for STEM education, and human-robot interaction.

- Video Link

http://www.youtube.com/watch?v=1hDv2zrmypg&list=PLB3323AA5AD9F5252
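
The lattice idea mentioned in the FD-013 description can be illustrated with a small sketch: points from a depth sensor are quantized to integer lattice cells whose spacing is the smallest block unit, and two frames are compared to see which cells gained or lost a block. This is only an illustration of lattice quantization under assumed inputs, not the Lattice-First algorithm itself; the function names, the unit dimensions, and the `min_hits` noise threshold are all hypothetical.

```python
from collections import Counter
from typing import Iterable, Set, Tuple

Point = Tuple[float, float, float]
Cell = Tuple[int, int, int]


def occupied_cells(points: Iterable[Point],
                   unit: Point,
                   origin: Point = (0.0, 0.0, 0.0),
                   min_hits: int = 1) -> Set[Cell]:
    """Quantize 3D points to lattice cells of size `unit`.

    A cell counts as occupied when at least `min_hits` points fall in it,
    which is a crude (assumed) way to suppress sensor noise.
    """
    counts: Counter = Counter()
    for p in points:
        # Floor division maps each coordinate to its lattice index.
        cell = tuple(int((p[i] - origin[i]) // unit[i]) for i in range(3))
        counts[cell] += 1
    return {cell for cell, n in counts.items() if n >= min_hits}


def diff_cells(before: Set[Cell], after: Set[Cell]) -> Tuple[Set[Cell], Set[Cell]]:
    """Return (added, removed) cells between two frames."""
    return after - before, before - after


# Hypothetical block unit in millimetres (width, depth, height).
unit = (8.0, 8.0, 9.6)
frame = [(1.0, 1.0, 1.0), (2.0, 3.0, 1.5), (9.0, 1.0, 1.0)]
cells = occupied_cells(frame, unit)
```

Comparing `occupied_cells` results from consecutive frames with `diff_cells` gives a simple picture of pieces being added or removed by hand. A real system would also need to register the lattice to the camera and cope with occlusion by the user's hands, which this sketch leaves out.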

FD-014: Full Body Gesture Recognition for Video Games (UCF)

- Presenters/Organizers: Dr. Joe LaViola

- Demo Description

We present a video game called Surkour in which players combine combat and Parkour navigation techniques to fight against a horde of zombies. Our game is able to recognize over 35 distinct 3D full body gestures with high accuracy using advanced recognition algorithms that exploit what the user is doing during gameplay.

- Research Lab Description

(Please see FD-013)

- Video Link

http://www.youtube.com/watch?v=ZwNzi5EAUBI&list=PLD4284FE6AC10AA07

FD-015: 3D Gestural Interface Metaphors for Controlling UAVs (UCF)

- Presenters/Organizers: Dr. Joe LaViola

- Demo Description

We present 3D interface techniques using the Microsoft Kinect, based on metaphors inspired by UAVs, to support a variety of flying operations a UAV can perform. Techniques include a first-person interaction metaphor, where a user takes a pose like a winged aircraft; a game controller metaphor, where a user’s hands mimic the control movements of console joysticks; “proxy” manipulation, where the user imagines manipulating the UAV as if it were in their grasp; and a pointing metaphor, in which the user assumes the identity of a monarch and commands the UAV as such.

- Research Lab Description

(Please see FD-013)

- Video Link

http://www.eecs.ucf.edu/~jjl/IUI368_1.mp4

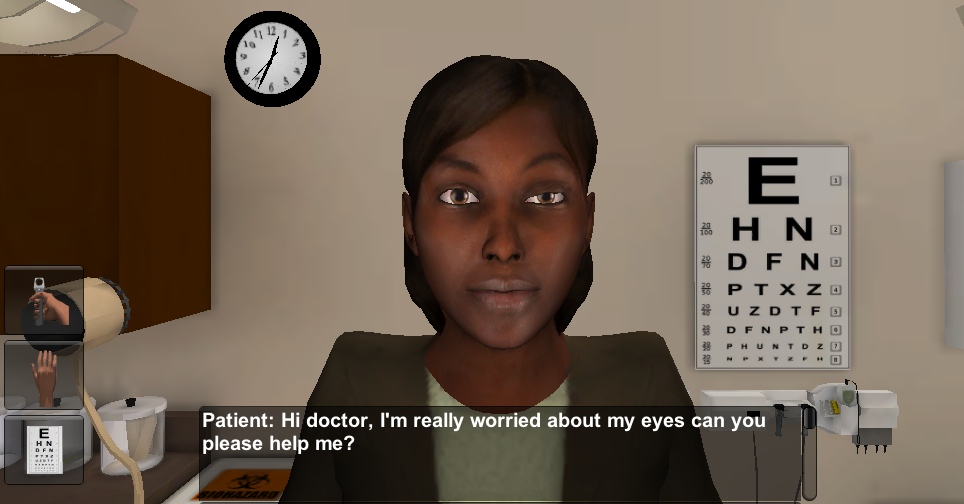

FD-016-1: Neurological Exam Rehearsal (UF)

- Presenters/Organizers: Dr. Ben Lok

- Demo Description

Traditionally, medical students practice interview skills with trained actors, who portray a certain condition during the interview. It is difficult or impossible for actors to simulate abnormal findings such as partial paralysis of the face or the left and right eye moving independently. These findings can indicate cranial nerve injuries. The Neurological Exam Rehearsal Virtual Environment (NERVE) has been used by over 200 students and is helping ensure that medical students can provide high-quality treatment to patients with abnormal injuries.

- Research Lab Description

The goal of the Virtual Experiences Research Group (VERG) at the University of Florida is to facilitate individual training through the use of human-computer interactions via virtual humans.

FD-016-2: Prostate Exam Simulator (UF)

- Presenters/Organizers: Dr. Ben Lok

- Demo Description

We present a simulator designed to prepare medical students for a stressful interpersonal experience: performing a prostate exam. Performing a prostate exam is a socially awkward experience which requires technical ability and social skills. Existing exam trainers do an adequate job improving learners' technical abilities, but do not give them an opportunity to improve the social skills needed to reassure patients. The prostate exam simulator is also augmented with force sensors which capture learners' exams, allowing them to receive feedback about their exam.

- Research Lab Description

(Please see FD-016-1)

FD-016-3: Peds (UF)

- Presenters/Organizers: Dr. Ben Lok

- Demo Description

Medical students often receive little exposure to certain vulnerable populations such as children or the intellectually disabled. Students often interact with standardized patients (actors that portray having a disease or condition), but standardized patients cannot accurately portray many vulnerable populations. This project explores allowing actors to portray vulnerable populations by using mixed reality virtual human technology. Specifically, we allow an adult to portray a 4-year-old child.

- Research Lab Description

(Please see FD-016-1)

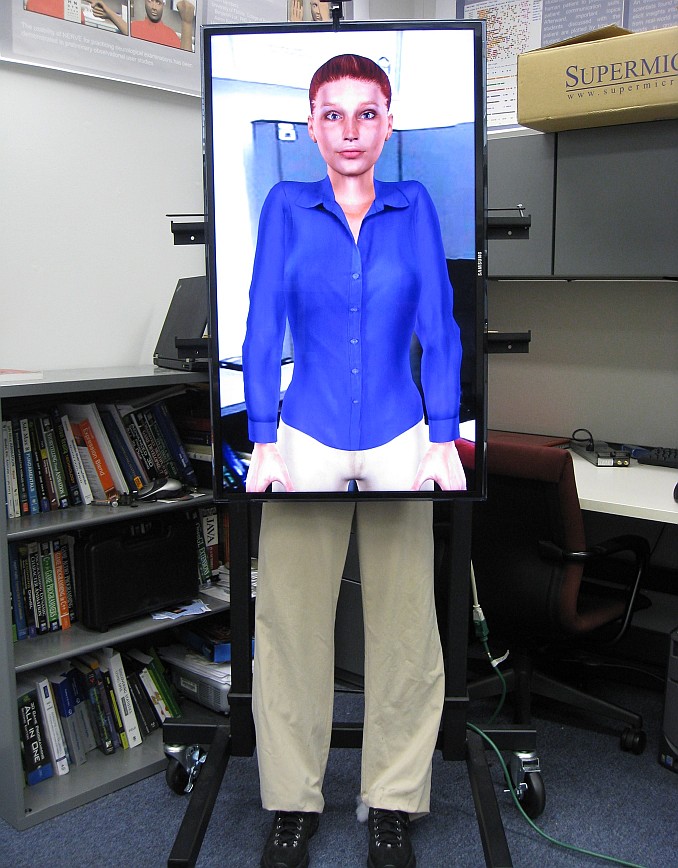

FD-016-4: Animatronic Digital Avatar (UF)

- Presenters/Organizers: Dr. Ben Lok

- Demo Description

Virtual humans have typically lived in purely virtual spaces: on laptops, TVs, HMDs, or CAVEs. ANDI (ANimatronic Digital avatar) explores the idea of bringing virtual humans into the physical space. ANDI uses a television for the upper portion (head, torso, and arms) and animatronic parts for the lower portion (legs, feet). ANDI is also low cost (< US$3000 including a laptop) and easy to transport. We have used ANDI in experiments looking at how ANDI's ability to occupy the physical space affects social presence, that is, the perception and treatment of ANDI as a real person.

- Research Lab Description

(Please see FD-016-1)

FD-017: TIPS - Toolkit for Illustration of Procedures in Surgery (UF)

- Presenters/Organizers: Dr. Jörg Peters

- Demo Description

TIPS is a new collaborative partnership of surgeons and IT researchers leveraging low-cost hardware and advanced software to illustrate surgical procedures. Such illustrations are needed for publishing, documenting, teaching, instruction and continuing education outreach, and for legal proceedings involving medical documentation. Our goal is to create and broadly disseminate a low-cost, computer-based, 3D interactive multimedia authoring and learning environment, including force feedback, for communication of surgical procedures. The approach is based on a novel paradigm that differs from both commercial training tools and academic surgery simulation in that it puts the specialist surgeon, as the author, in control and at the center of all content creation. This will open up a completely new channel of communication and outreach between surgical specialists and practicing surgeons or surgeons-in-training (residents).

- Research Lab Description

The SurfLab pursues research in geometric modeling and its application to areas such as rapid design of complex geometry, 3D Graphics, and Scientific Visualization as well as computing and real time simulation on such models.

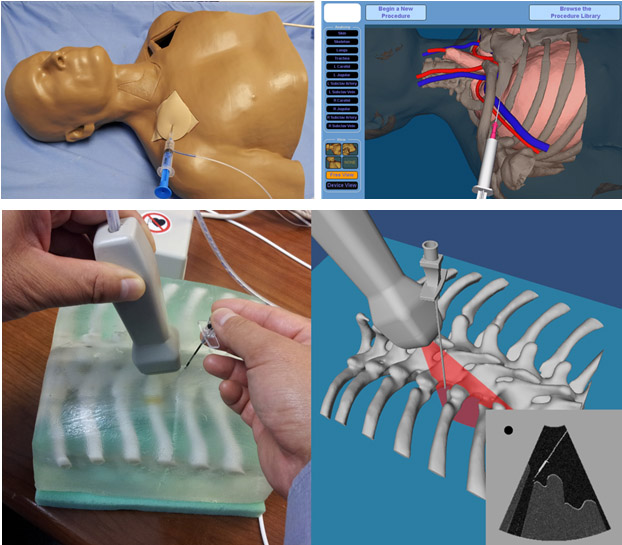

FD-018: Mixed Physical and Virtual Simulations for Medical Applications (UF)

- Presenters/Organizers: Dr. Samsun Lampotang

- Demo Description

The Virtual Anesthesia Machine (VAM) team, affiliated with CSSALT, offers a portfolio of web-enabled simulations at http://vam.anest.ufl.edu/wip.html. In recent years, our research efforts have focused on combining physical and virtual simulations to create a set of mixed simulators for training in medical equipment use, central venous access, ventriculostomy and regional anesthesia. We will bring two mixed simulators to the event: one for central venous access and one for regional anesthesia.

- Research Lab Description

The Center for Safety, Simulation & Advanced Learning Technologies, CSSALT, provides education, training and services to residents, faculty, clinical personnel and staff throughout the UF Academic Health Center including the UF&Shands Healthcare system, to clinicians in the state of Florida, to UF medical, veterinary, dental, engineering and other health profession students, to local and regional emergency personnel and to industry executives and personnel worldwide. CSSALT builds on the legacy of a continuous and sustained R&D effort in simulation in healthcare that began in 1985 and remains active today in the development and application of simulation technology. In 1987, the current dean of the UF College of Medicine, Dr. Good, and the director of CSSALT, Dr. Lampotang, developed the first prototype of what would become the UF Human Patient Simulator (HPS) mannequin patient simulator technology, which is licensed to CAE/Medical Education Technologies, Inc. (METI) and used worldwide.

FD-020: Laparoscopic Trainer and Spatial Augmented Reality Game Environment (USF)

- Presenters/Organizers: Dr. Yu Sun

- Demo Description

In this showcase, we will demonstrate our recent developments: a VTEI laparoscopic trainer and a spatial augmented reality game environment (SAGE) for anatomy learning.

- Research Lab Description

The students and faculty in the Robot Perception and Action Lab (RPAL) are working on several exciting research projects: virtually transparent epidermal imagery (VTEI) for MIS, robotics modeling of skilled hand tasks, telepresence for medicine, and robot grasp learning and planning. Our research has been reported by the Discovery Science Channel, Univision, Bay News 9, and other media.

- Video Link

http://www.youtube.com/watch?feature=player_embedded&v=fQ6th2iLTNc

FD-021-1: Avatars4Change (USF)

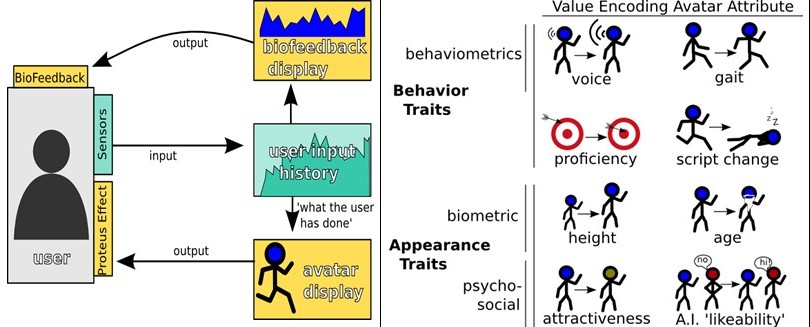

- Presenters/Organizers: Dr. Andrew Raij

- Demo Description

In this demo, attendees will be able to interact with an avatar designed to motivate behavior change. Users will download an application to their Android phone. The application will monitor user physical activity (walking) and display avatar-based visualizations on the phone in response to sensor input. The data will also be transmitted in real time to a PC connected to a projector. The PC will project a public visualization of activity across all users via their avatars. By default, the system will not transmit identity information. Anonymity will be preserved unless users opt in to sharing.

- Research Lab Description

The Powerful Interactive Experiences (PIE) Lab focuses on designing, building and evaluating novel PIEs that solve critical societal challenges, such as preventing obesity, reducing stress, and training healthcare professionals. In the process, we make scientific contributions to a variety of fields, including human-computer interaction, virtual reality, sensing, simulation, information visualization, personal informatics, and mobile health.

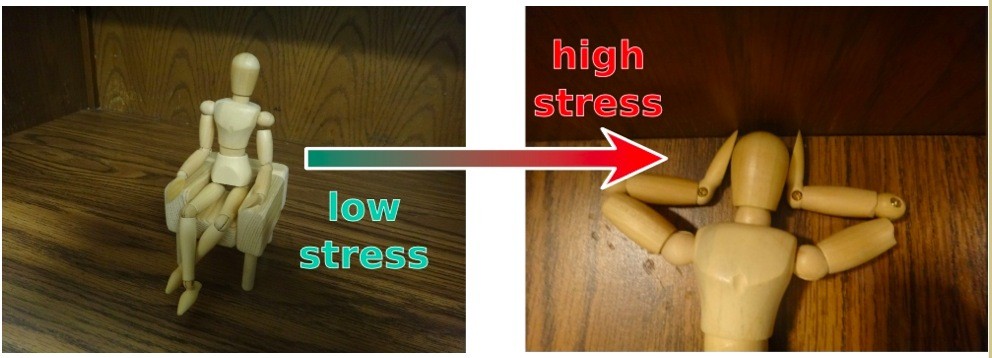

FD-021-2: Ambient Avatars (USF)

- Presenters/Organizers: Dr. Andrew Raij

- Demo Description

In this demo, attendees will interact with an ambient avatar, a miniature figure placed on a table. The avatar provides ambient feedback on the user’s stress level. The figure will raise its arms over its head (a universal symbol of stress) as it detects increasing levels of stress in the user’s voice (via microphone).

- Research Lab Description

(Please see FD-021-1)

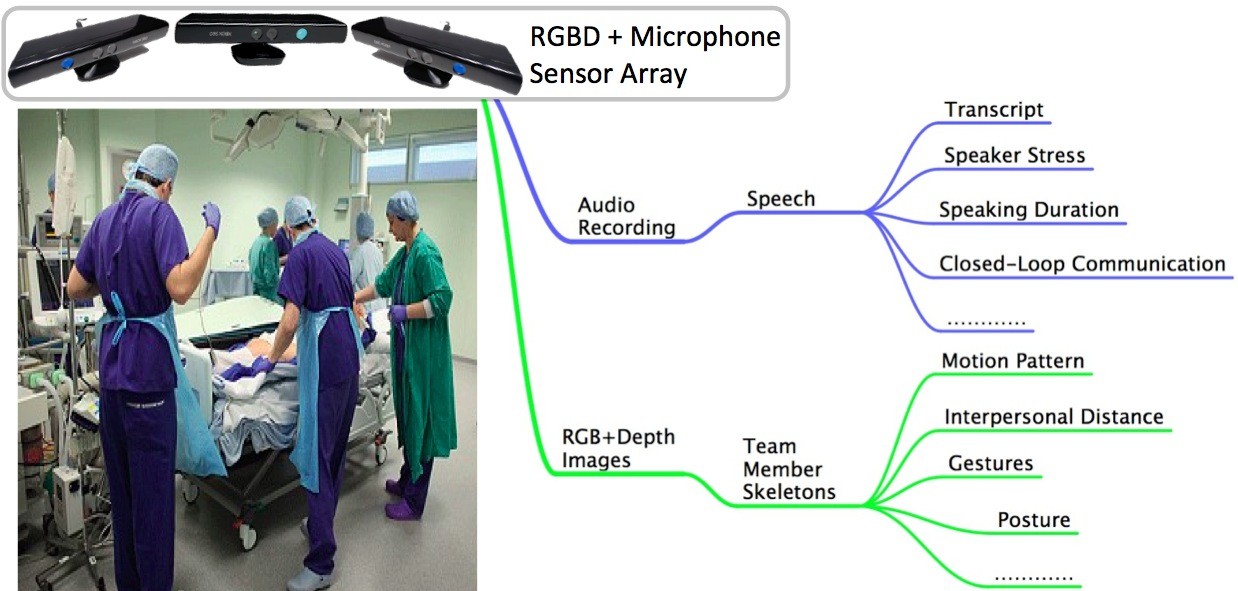

FD-021-3: TeamVis Debriefing System (USF)

- Presenters/Organizers: Dr. Andrew Raij

- Demo Description

In this demo, attendees will use the TeamVis debriefing system to review a real-world medical team training scenario. TeamVis leverages a multimodal description of team interaction (captured via a variety of sensors) to facilitate review of objective team performance.

- Research Lab Description

(Please see FD-021-1)

FD-022: VR Job Training System (USF)

- Presenters/Organizers: Dr. Chad Wingrave

- Demo Description

We present an early demonstration of a VR system to evaluate and train job seekers with cognitive and physical disabilities. A job trainer will be able to run multiple people through different job settings, away from harm, without the fear of on-the-job failure and without having to travel between sites. Trainers can look for a person's ability to follow commands or react to distractions.

- Research Lab Description

The Center for Assistive, Rehabilitation and Robotics Technologies at the University of South Florida (USF) is a multidisciplinary center that integrates research, education and service for the advancement of assistive and rehabilitation robotics technologies. Researchers from various departments and colleges at USF including the College of Engineering, the School of Physical Therapy and Rehabilitation Sciences, the College of the Arts, and the College of Behavioral and Community Sciences collaborate on various projects. Our mission is to improve the quality of life, and increase independence and community reintegration of individuals with reduced functional capabilities due to aging, disability or traumatic injury, as in the case of our wounded warriors, through integrated research, education and service in assistive and rehabilitation technologies in collaboration with consumers, clinicians, government and industry partners.