Research Demonstrations

IEEE Virtual Reality 2013 has an exciting slate of research demonstrations planned for this year.

The research demonstration room (Pelican) will be open from 8:00am to 5:00pm on Monday and Tuesday, and from 8:00am to 2:00pm on Wednesday.

Demonstrations are expected to be "Live" on Tuesday from 3:00pm to 5:00pm before the banquet.

Demonstration setup is Sunday, all day.

For those presenting research demonstrations, an information flyer is available here.

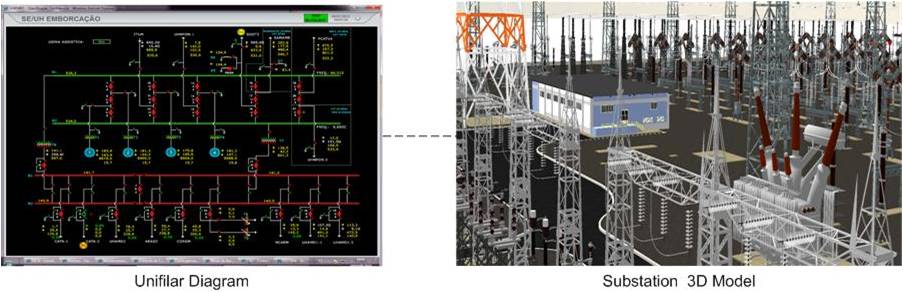

RD-001: VRCEMIG: a Virtual Reality System for Real Time Control of Electric Substations

- Presenters/Organizers: Alexandre Cardoso†, Edgard Lamounier Jr.†, Paulo Prado§

† Faculty of Electrical Engineering at Federal University of Uberlândia (Brazil), § CEMIG Minas Gerais Electric Energy Company (Brazil)

- Description: A novel approach to controlling and operating electric substations using Virtual Reality techniques. A controlled substation is represented by a 3D model that is integrated with the supervision, data acquisition and control system of an energy company. Today, this is done on a 2D diagram, which lacks intuitiveness. VR principles such as immersion and navigation are provided to support not only training for future operators but also real-time operation. This strategy allows the energy company to save time and money during the learning phase, since apprentices can operate all substations from the company's control center alone.

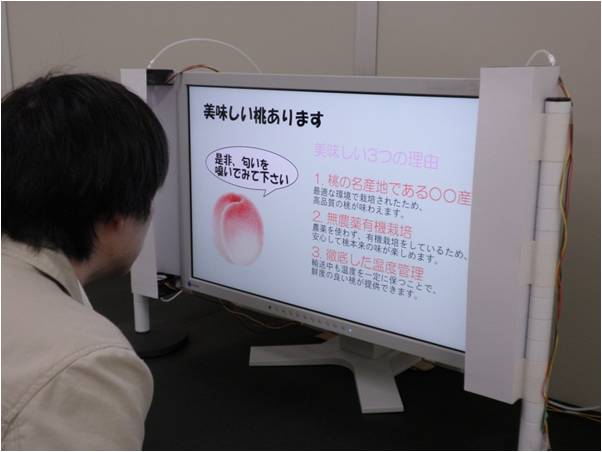

RD-002: Smelling Screen: Presenting a Virtual Odor Source on a LCD Screen

- Presenters/Organizers: Haruka Matsukura, Tatsuhiro Yoneda, Hiroshi Ishida

Tokyo University of Agriculture and Technology (Japan)

- Description: The smelling screen is a new olfactory display that can generate a localized odor distribution on a two-dimensional display screen. The generated odor distribution is as if an odor source had been placed on the screen, and leads the user to perceive the odor as emanating from a specific region of the screen. The position of this virtual odor source can be shifted to an arbitrary position on the screen. The user can freely move his/her head to sniff at various locations on the screen, and can experience realistic changes in the odor intensity with respect to the sniffing location.

RD-003: AES-risk: An Environment for Simulation of Risk Perception

- Presenters/Organizers: Vitor Jorge†, Luciana Nedel†, Anderson Maciel†, Jackson Oliveira‡, Frederico Faria§

† Universidade Federal do Rio Grande do Sul (Brazil), ‡ AES Sul (Brazil), § Nexo Art (Brazil)

- Description: In this demo we present an immersive virtual reality (VR) simulator to measure risk perception in realistic working environments. It provides an ideal environment for exposing people to danger with no chance of causing them any harm. However, whereas in the physical work environment workers have to perform their jobs (primary task) and be safe (secondary task), in a VR simulator they also have to deal with an additional source of cognitive load: the user interface.

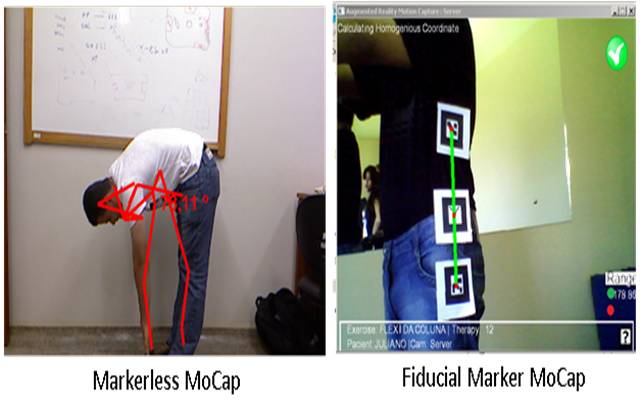

RD-004: An Middleware for Motion Capture Devices applied to Virtual Rehab

- Presenters/Organizers: Eduardo Damasceno, Edgard Lamounier

Computer Graphs Lab at Federal University of Uberlandia (Brazil)

- Description: This demonstration lets visitors use assorted motion capture devices for virtual rehabilitation. We present the MixCap Middleware, developed to blend different types of motion capture technologies and to consolidate their output into one data format for biomechanical analysis. The Middleware and its associated prototypes were developed for use by health professionals who have low-cost motion tracking equipment.

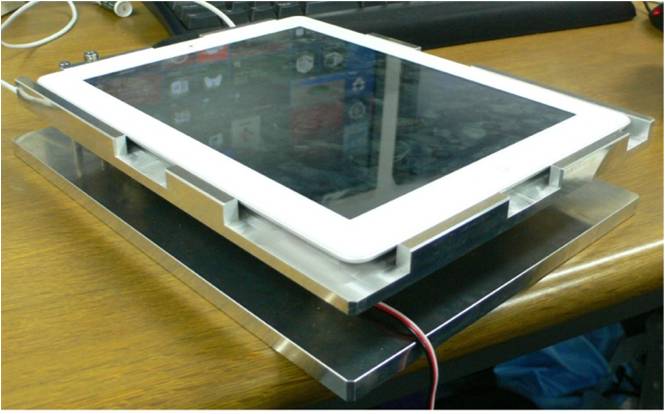

RD-006: An actuated stage for a tablet computer: generation of tactile feedback and communication using the motion of the whole table

- Presenters/Organizers: Itsuo Kumazawa

Imaging Science and Engineering Laboratory at Tokyo Institute of Technology (Japan)

- Description: We demonstrate two of our original tactile feedback devices and let users experience their performance. The devices, combined with a tablet computer, form a kind of communication robot that has a rotating wheel or an actuated stage to give tactile feedback to the user's fingers while the user is performing a gesture operation on the touch panel. The devices are designed to give comfortable tactile feedback with smooth movement actuated by the eccentric rotation of a ball bearing. Representation of the textures, depth and hardness of a virtual surface is demonstrated as an application.

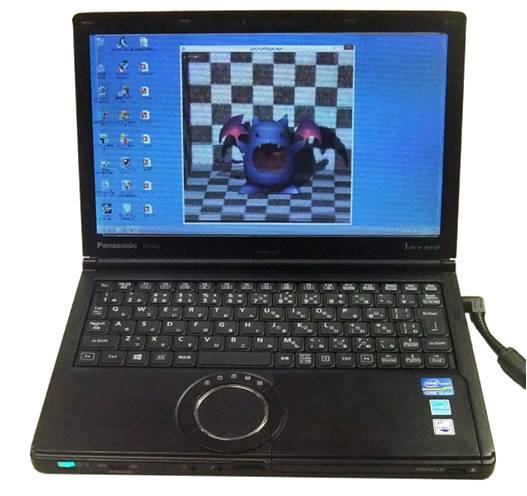

RD-007: Real-time Rendering of Extended Fractional View Integral Photography

- Presenters/Organizers: Sho Kimura, Kazuhisa Yanaka

Kanagawa Institute of Technology (Japan)

- Description: A novel real-time visualization system for integral photography is demonstrated, in which autostereoscopic animation of a CG character can be displayed with not only horizontal but also vertical parallax. By introducing the extended fractional view (EFV) method, custom-made fly-lens sheets are not required, and a considerable reduction in cost is possible, especially for limited production. The character on the screen can be moved with a gamepad. In this study, we improved the rendering speed considerably by using the GPU of a PC and achieved real-time processing by introducing an algorithm suited to shaders.

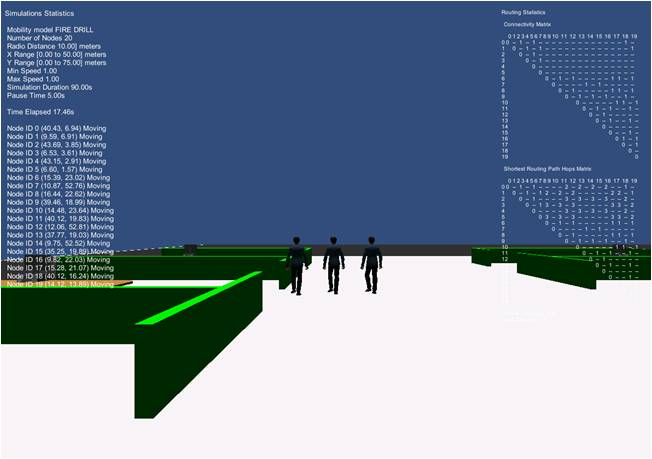

RD-008: Using Game Engine to Enhance Mobility Modeling in Network Simulations

- Presenters/Organizers: Xun Luo

Qualcomm Research (USA)

- Description: Mobility modeling is important for network simulation. However, the Random Waypoint model and its variants fail to depict mobile users' movement patterns with satisfactory fidelity. Two features of a game engine, namely character AI and pathfinding, can be utilized to enhance mobility modeling in network simulations. This demo builds a virtual scene of a typical office environment with multiple employees. Movements of the virtual characters are first generated and visualized using the Random Waypoint model, and then using alternative approaches that combine AI-based control with pathfinding. The comparison clearly shows that the latter improves simulation fidelity significantly.

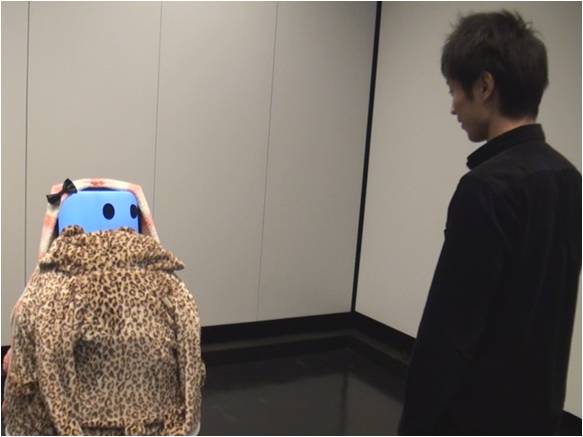

RD-009: Shugo-robot face

- Presenters: Yuta Murofushi, Ryosuke Tasaki, Natsumi Isobe; Organizers: Kazuhiko Terashima, Michiteru Kitazaki

Visual Psychophysics Lab and Center for Human-robot Symbiosis Research at Toyohashi University of Technology (Japan)

- Description: We demonstrate a virtual face of a robot with minimum humanity. The robot is called "shugo-robot", named after "shugo-rei (guardian spirit)". The shugo-robot follows her/his master by using laser sensors, and performs greeting behaviors by gazing at the master's friends and others. The head of the robot has a camera, which finds human faces. The robot's face then gazes at the person nearest to the robot. This behavior makes users believe the robot intends to communicate with them. The robot blinks at random intervals to show that it is alive.

RD-010: Animal biological motion and its fake motion

- Presenters: Natsumi Isobe, Michiteru Kitazaki; Organizer: Michiteru Kitazaki

Visual Psychophysics Lab and Center for Human-robot Symbiosis Research at Toyohashi University of Technology (Japan)

- Description: How sensitive is our perception to the actions of quadruped animals? We will present point-light displays of real quadruped animal actions and of human mimicry of those actions. Human observers can distinguish the real animal actions from human mimicry even with a short presentation time (500ms), but the accuracy decreased with inverted (upside down) presentation. Thus, the sensitivity of our perception is limited to upright postures of quadruped animals.

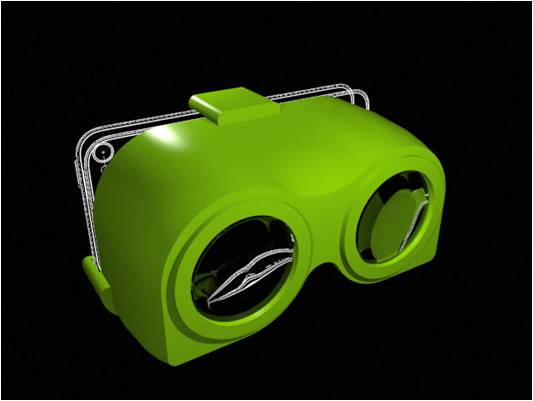

RD-011,012: Open Virtual Reality

- Presenters/Organizers: Mark Bolas†‡, Perry Hoberman‡, Thai Phan†, Palmer Luckey§, James Iliff‡, Nate Burba‡, Ian McDowall¶, David M. Krum†

† USC Institute for Creative Technologies (USA), ‡ USC School of Cinematic Arts (USA), § Oculus VR (USA), ¶ Fakespace Labs (USA)

- Description: The ICT Mixed Reality Lab is leveraging an open source philosophy to influence and disrupt industry. Projects spun out of the lab's efforts include the VR2GO smartphone-based viewer, the inVerse tablet-based viewer, the Socket HMD reference design, the Oculus Rift and the Project Holodeck gaming platforms, a repurposed FOV2GO design with Nokia Lumia phones for a 3D user interface course at Columbia University, and the EventLabs Socket-based HMD at the University of Barcelona. A subset of these will be demonstrated. This open approach is providing low-cost yet surprisingly compelling immersive experiences.

RD-014: Creating 3D Projection on Tangible Objects

- Presenters/Organizers: Pekka Nisula, Jussi Kangasoja

Oulu University of Applied Sciences (Finland)

- Description: Our work explores the possibilities of using 3D projection on physical objects with a multi-projector set-up, which we aim to use for exhibition, teaching, design and early prototyping purposes. We also aim to create more immersive and engaging multimedia presentations, and to move from conventional 2D media to 3D media by projecting the visual content on a physical object. In our demonstration we used a sphere-shaped object and projected a visualization of the rotating Earth on it with five projectors. In the future we will focus on enabling functional interactions to make projections more useful and interesting, e.g. for teaching purposes.

RD-015: Immersive Virtual Reality On-The-Go

- Presenters/Organizers: Aryabrata Basu, Kyle Johnsen

Virtual Experiences Laboratory at University of Georgia (USA)

- Description: We propose to demonstrate a ubiquitous immersive virtual reality system that is highly scalable and accessible to a larger audience. The latest trends in mobile communications and in the entertainment industry seem to confirm that accessibility and scalability play an important role in democratizing technology. We present a practical design of such a system that offers the core affordances of immersive virtual reality in a portable and untethered configuration. In addition, we have developed an extensive immersive virtual experience that engages users visually and aurally. This is an effort towards integrating VR into the space and time of user workflows.

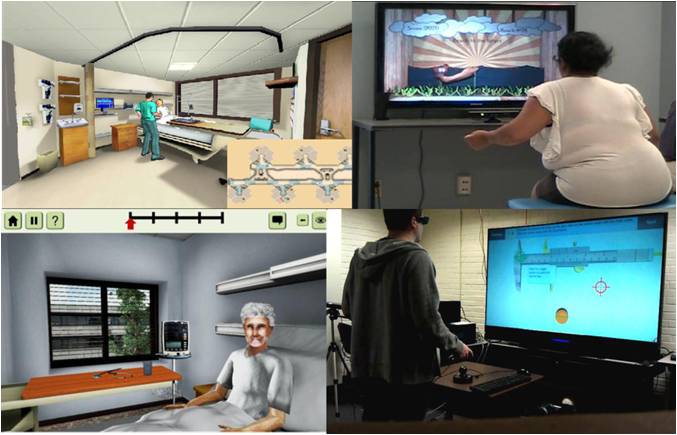

RD-016,017,018,019: Serious Games for Training, Rehabilitation, and Workforce Development

- Presenters/Organizers: Jeffrey Bertrand, Lauren Cairco Dukes, Patrick Dukes, Elham Ebrahimi, Austen Hayes, Naja Mack, Jerome McClendon, Dhaval Parmar, Toni Bloodworth Pence, Blair Shannon, Aliceann Wachter, Yanxiang Wu, Sabarish Babu, Larry F. Hodges

School of Computing at Clemson University (USA)

- Description: We present a set of demonstrations of current work in the Clemson University Virtual Environments Group involving the development and testing of interactive virtual environments for applications in training, rehabilitation and workforce development. All of these applications are designed to leverage commodity hardware such as HDTVs, game controllers, and the Kinect sensor.